17.3 Memory networks with spiking neurons

The Hopfield model is an abstract conceptual model and rather far from biological reality. In this section we aim at pushing the abstract model in the direction of increased biological plausibility. We focus on two aspects. In Section 17.3.1 we replace the binary neurons of the Hopfield model with spiking neuron models of the class of Generalized Linear Models or Spike Response Models; cf. Chapter 9. Then, in Section 17.3.2 we ask whether it is possible to store multiple patterns in a network where excitatory and inhibitory neurons are functionally separated from each other.

17.3.1 Activity of spiking networks

Neuron models such as the Spike Response Model with escape noise, formulated in the framework of Generalized Linear Models, can predict spike times of real neurons to a high degree of accuracy; cf. Chapters 9 and 11. We therefore choose the Spike Response Model (SRM) as our candidate for a biologically plausible neuron model. Here we use these neuron models to analyze the macroscopic dynamics in attractor memory networks of spiking neurons.

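As discussed in Chapter 9, the membrane potential $u_i$ of a neuron $i$ embedded in a large network can be described as

$$u_i(t) = \sum_f \eta(t - t_i^{f}) + h_i(t) + u_{\rm rest} \tag{17.33}$$

where the sum runs over the past firing times $t_i^{f}$ of neuron $i$, the kernel $\eta$ summarizes the refractoriness caused by the spike afterpotential, $u_{\rm rest}$ is the resting potential, and $h_i(t)$ is the (deterministic part of the) input potential

$$h_i(t) = \sum_j w_{ij} \int_0^\infty \epsilon(s)\, S_j(t-s)\, \mathrm{d}s \, . \tag{17.34}$$

Here $i$ denotes the postsynaptic neuron, $w_{ij}$ is the coupling strength from a presynaptic neuron $j$ to $i$, $\epsilon(s)$ is the time course of the postsynaptic potential, and $S_j(t) = \sum_f \delta(t - t_j^{f})$ is the spike train of neuron $j$.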

Statistical fluctuations in the input as well as intrinsic noise sources are both incorporated into an escape rate (or stochastic intensity) $\rho_i(t)$ of neuron $i$

$$\rho_i(t) = f\big(u_i(t) - \vartheta\big) \tag{17.35}$$

which depends on the momentary distance between the (noiseless) membrane potential $u_i(t)$ and the threshold $\vartheta$.
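The interplay of refractoriness, input potential, and escape noise can be illustrated with a minimal discrete-time sketch. It is not the model fitted in Chapters 9 and 11: the exponential refractory kernel, the exponential escape function, and all parameter values (`eta0`, `tau_eta`, `rho0`, `delta_u`, `theta`) are assumptions chosen for illustration only.

```python
import numpy as np

def simulate_srm(h, dt=1e-3, theta=1.0, u_rest=0.0,
                 eta0=-5.0, tau_eta=0.02, rho0=10.0, delta_u=0.2, seed=0):
    """Simulate one SRM neuron with escape noise for a given input potential h.

    Assumptions (hypothetical, for illustration):
      eta(s) = eta0 * exp(-s / tau_eta)        refractory kernel
      f(x)   = rho0 * exp(x / delta_u)         exponential escape rate
    """
    rng = np.random.default_rng(seed)
    h = np.asarray(h, dtype=float)
    u = np.empty_like(h)
    spikes = np.zeros(h.shape, dtype=bool)
    refr = 0.0                          # summed afterpotentials of all past spikes
    decay = np.exp(-dt / tau_eta)
    for k in range(h.size):
        u[k] = refr + h[k] + u_rest     # membrane potential: refractoriness + input
        rho = rho0 * np.exp((u[k] - theta) / delta_u)        # escape rate
        spikes[k] = rng.random() < 1.0 - np.exp(-rho * dt)   # spike probability in dt
        refr *= decay                   # afterpotentials decay exponentially
        if spikes[k]:
            refr += eta0                # each new spike adds a negative afterpotential
    return u, spikes

# Example: constant suprathreshold input for 2 seconds
u, spikes = simulate_srm(np.full(2000, 1.2))
print("number of spikes:", int(spikes.sum()))
```

Because the exponential kernel sums linearly, a single decaying variable is enough to track the contribution of all past spikes.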

In order to embed memories in the network of SRM neurons we use Eq. (17.27) and proceed as in Section 17.2.6. There are three differences compared to the previous section. First, while previously $S_j$ denoted a binary variable in discrete time, we now work with spikes in continuous time. Second, in the Hopfield model a neuron can be active in every time step while here spikes must have a minimal distance because of refractoriness. Third, the input potential $h_i$ is only one of the contributions to the total membrane potential.

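Despite these differences the formalism of Section 17.2.6 can be directly applied to the case at hand. Let us define the instantaneous overlap of the spike pattern in the network with pattern $\mu$ as

$$m^\mu(t) = c' \sum_j (\xi_j^\mu - a)\, S_j(t) \tag{17.36}$$

where $S_j(t)$ is the spike train of neuron $j$ and $c'$ is the constant prefactor of the weights in Eq. (17.27). Note that, because of the Dirac $\delta$-function, we need to integrate over $m^\mu(t)$ in order to arrive at an observable quantity. Such an integration is automatically performed by each neuron. Indeed, the input potential Eq. (17.34) can be written as

$$\begin{aligned} h_i(t) &= c' \sum_j \sum_\mu (\xi_i^\mu - b)(\xi_j^\mu - a) \int_0^\infty \epsilon(s)\, S_j(t-s)\, \mathrm{d}s \\ &= \sum_\mu (\xi_i^\mu - b) \int_0^\infty \epsilon(s)\, m^\mu(t-s)\, \mathrm{d}s \, . \end{aligned} \tag{17.37}$$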

Thus, in a network of $N$ neurons which has stored $M$ patterns, the input potential is completely characterized by the $M$ overlap variables $m^\mu(t)$, which reflects an enormous reduction in the complexity of the mathematical problem. Nevertheless, each neuron keeps its identity for two reasons:

(i) Each neuron $i$ is characterized by its ‘private’ set of past firing times $t_i^{f}$. Therefore each neuron is in a different state of refractoriness and adaptation, which manifests itself by the term $\sum_f \eta(t - t_i^{f})$ in the total membrane potential.

(ii) Each neuron has a different functional role during memory retrieval. This role is defined by the sequence $\xi_i^1, \xi_i^2, \dots, \xi_i^M$. For example, if neuron $i$ is part of the active assembly in a few patterns and should be inactive in the other 1996 patterns, then its functional role is defined by the set of numbers $\xi_i^\mu = 1$ for the patterns in which it is active and $\xi_i^\mu = 0$ otherwise. In a network that stores $M$ different patterns there are $2^M$ different functional roles so that it is extremely unlikely that two neurons play the same role. Therefore each of the $N$ neurons in the network is different!
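The reduction from $N$ presynaptic inputs to $M$ overlap variables can be checked numerically. The sketch below assumes Hebbian weights of the form $w_{ij} = c \sum_\mu (\xi_i^\mu - b)(\xi_j^\mu - a)$ with patterns $\xi_i^\mu \in \{0,1\}$. The network size, the normalization `c`, and the vector `x` (a stand-in for the filtered spike trains $\int \epsilon(s) S_j(t-s)\,\mathrm{d}s$ at one moment in time) are hypothetical choices.

```python
import numpy as np

def hebbian_weights(xi, a, b, c):
    """W[i, j] = c * sum_mu (xi[mu, i] - b) * (xi[mu, j] - a)."""
    return c * (xi - b).T @ (xi - a)

def overlaps(xi, x, a, c):
    """One (filtered) overlap per pattern: m[mu] = c * sum_j (xi[mu, j] - a) * x[j]."""
    return c * (xi - a) @ x

def input_from_overlaps(xi, m, b):
    """h[i] = sum_mu (xi[mu, i] - b) * m[mu]."""
    return (xi - b).T @ m

rng = np.random.default_rng(1)
N, M, a, b = 400, 5, 0.2, 0.2            # hypothetical sizes and parameters
c = 1.0 / (2 * a * (1 - a) * N)          # assumed normalization
xi = (rng.random((M, N)) < a).astype(float)
x = rng.random(N)                        # stand-in for filtered presynaptic spike trains

h_full = hebbian_weights(xi, a, b, c) @ x                       # uses all N*N weights
h_reduced = input_from_overlaps(xi, overlaps(xi, x, a, c), b)   # uses only M overlaps
print(np.allclose(h_full, h_reduced))
```

The two computations agree because the weight matrix has rank at most $M$. The neuron-specific information enters only through the factors $(\xi_i^\mu - b)$.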

However, during retrieval we can reduce the complexity of the dynamics drastically. Suppose that during the interval $t_0 < t < t_0 + T$ all overlaps are negligible, except the overlap with one of the patterns, say pattern $\nu$. Then the input potential in Eq. (17.37) reduces for $t = t_0 + T$ to

$$h_i(t) = (\xi_i^\nu - b) \int_0^\infty \epsilon(s)\, m^\nu(t-s)\, \mathrm{d}s \tag{17.38}$$

where we have assumed that $\epsilon(s) = 0$ for $s > T$. Therefore, the network with its $N$ different neurons splits up into two homogeneous populations: the first one comprises all neurons with $\xi_i^\nu = 1$, i.e., those that should be ‘ON’ during retrieval of pattern $\nu$; and the second comprises all neurons with $\xi_i^\nu = 0$, i.e., those that should be ‘OFF’ during retrieval of pattern $\nu$.

In other words, we can apply the mathematical tools of population dynamics that were presented in Part III of this book so as to analyze memory retrieval in a network of $N$ different neurons.
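A small numerical illustration of the split into two homogeneous populations follows. It assumes that, with a single non-negligible overlap, the input potential of neuron $i$ is $(\xi_i^\nu - b)$ times the filtered overlap. The pattern `xi_nu` and the values of `m_filtered` and `b` are made up for the example.

```python
import numpy as np

def retrieval_input(xi_nu, m_filtered, b):
    """Input potential of each neuron when only pattern nu has a non-zero overlap."""
    return (np.asarray(xi_nu, dtype=float) - b) * m_filtered

xi_nu = np.array([1, 0, 0, 1, 0, 1])     # hypothetical pattern nu for six neurons
h = retrieval_input(xi_nu, m_filtered=0.8, b=0.25)

on = h[xi_nu == 1]     # all 'ON' neurons receive the same input, (1 - b) * m_filtered
off = h[xi_nu == 0]    # all 'OFF' neurons receive the same input, -b * m_filtered
print("ON input:", on, "OFF input:", off)
```

Although every neuron has its own row of weights, only two distinct input values occur, which is what permits a population-level description.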

Example: Spiking neurons without adaptation

In the absence of adaptation, the membrane potential depends only on the input potential and the time since the last spike. Thus, Eq. (17.33) reduces to

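$$u_i(t) = \eta(t - \hat{t}_i) + h_i(t) + u_{\rm rest} \tag{17.39}$$

where $\hat{t}_i$ denotes the last firing time of neuron $i$ and $\eta(t - \hat{t}_i)$ summarizes the effect of refractoriness. Under the assumption of an initial overlap with pattern $\nu$ and no overlap with other patterns, the input potential is given by Eq. (17.38). Thus, the network of $N$ neurons splits into an ‘ON’ population with input potential

$$h^{\rm ON}(t) = (1 - b) \int_0^\infty \epsilon(s)\, m^\nu(t-s)\, \mathrm{d}s \tag{17.40}$$

and an ‘OFF’ population with input potential

$$h^{\rm OFF}(t) = -b \int_0^\infty \epsilon(s)\, m^\nu(t-s)\, \mathrm{d}s \, . \tag{17.41}$$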

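For each of the populations, we can write down the integral equation of the population dynamics that we have seen in Chapter 14. For example, the population activity $A^{\rm ON}(t)$ of the ‘ON’-population evolves according to

$$A^{\rm ON}(t) = \int_{-\infty}^{t} P_I(t \mid \hat{t})\, A^{\rm ON}(\hat{t})\, \mathrm{d}\hat{t} \tag{17.42}$$

with

$$P_I(t \mid \hat{t}) = \rho(t \mid \hat{t})\, \exp\left[ - \int_{\hat{t}}^{t} \rho(t' \mid \hat{t})\, \mathrm{d}t' \right] \tag{17.43}$$

where $\rho(t \mid \hat{t}) = f\big(\eta(t - \hat{t}) + h^{\rm ON}(t) + u_{\rm rest} - \vartheta\big)$. An analogous equation holds for the ‘OFF’-population.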
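One way to integrate a population equation of the renewal type $A(t) = \int_{-\infty}^{t} P_I(t \mid \hat{t})\, A(\hat{t})\, \mathrm{d}\hat{t}$ numerically is to follow the fraction of neurons in each "age" bin, where age is the time since the last spike. The sketch below is a simple first-order discretization, not the scheme of Chapter 14. The exponential refractory kernel, the exponential escape rate, the choice $u_{\rm rest} = 0$, and all parameter values are assumptions.

```python
import numpy as np

def population_activity(h, dt=1e-3, n_age=300, theta=1.0,
                        eta0=-3.0, tau_eta=0.01, rho0=20.0, delta_u=0.5):
    """Population activity A(t) in Hz for a homogeneous population with input h(t).

    q[k] is the fraction of neurons whose last spike occurred k time steps ago.
    The last bin collects all neurons with age >= (n_age - 1) * dt.
    """
    ages = np.arange(n_age) * dt
    eta = eta0 * np.exp(-ages / tau_eta)   # assumed refractory kernel eta(t - t_hat)
    q = np.zeros(n_age)
    q[-1] = 1.0                            # start with all neurons non-refractory
    A = np.zeros(len(h))
    for k, hk in enumerate(h):
        rho = rho0 * np.exp((eta + hk - theta) / delta_u)   # hazard for each age
        fired = q * (1.0 - np.exp(-rho * dt))               # fraction firing now
        A[k] = fired.sum() / dt
        survivors = q - fired
        q_new = np.zeros(n_age)
        q_new[1:] = survivors[:-1]         # survivors grow older by one bin
        q_new[-1] += survivors[-1]         # the oldest bin absorbs its own survivors
        q_new[0] = fired.sum()             # neurons that fired restart at age zero
        q = q_new
    return A, q

# Example: constant inputs mimicking an 'ON' and an 'OFF' population
A_on, q_on = population_activity(np.full(1000, 0.75))
A_off, q_off = population_activity(np.full(1000, -0.25))
print("mean rates (Hz):", A_on.mean(), A_off.mean())
```

The update conserves the total fraction of neurons, since every neuron either survives into the next age bin or restarts at age zero.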

Finally, we use Eq. (17.36) to close the system of equations. The sum over all neurons can be split into one sum over the ‘ON’-population and another one over the ‘OFF’-population, of size $aN$ and $(1-a)N$, respectively. If the number $N$ of neurons is large, the overlap therefore is

$$m^\nu(t) = c'\, a (1-a) N \left[ A^{\rm ON}(t) - A^{\rm OFF}(t) \right] . \tag{17.44}$$

Thus, the retrieval of pattern $\nu$ is controlled by a small number of macroscopic equations.
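The step from the single-neuron spike trains to the population-level overlap can be spelled out explicitly. The derivation below is a sketch under three assumptions: the overlap is defined as $m^\nu(t) = c' \sum_j (\xi_j^\nu - a) S_j(t)$ with patterns $\xi_j^\nu \in \{0,1\}$; the prefactor $c'$ is the one used in the weights; and the population activities are the normalized summed spike trains, $A^{\rm ON}(t) = (aN)^{-1} \sum_{j \in {\rm ON}} S_j(t)$ and $A^{\rm OFF}(t) = [(1-a)N]^{-1} \sum_{j \in {\rm OFF}} S_j(t)$.

```latex
\begin{align*}
m^\nu(t)
  &= c' \sum_{j \in \mathrm{ON}} (1 - a)\, S_j(t)
   + c' \sum_{j \in \mathrm{OFF}} (0 - a)\, S_j(t) \\
  &= c' (1 - a)\, aN\, A^{\mathrm{ON}}(t)
   - c' a\, (1 - a) N\, A^{\mathrm{OFF}}(t) \\
  &= c'\, a (1 - a) N \left[ A^{\mathrm{ON}}(t) - A^{\mathrm{OFF}}(t) \right] .
\end{align*}
```

If one assumes, for instance, the normalization $c' = 1/[2a(1-a)N]$, the prefactor reduces to $1/2$. The overlap then measures half the difference between the activities of the two populations.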

In an analogous sequence of calculations one needs to check that the overlap $m^\mu$ with the other patterns (with $\mu \neq \nu$) does not increase during retrieval of pattern $\nu$.


17.3.2 Excitatory and inhibitory neurons

Synaptic weights in the Hopfield model can take both positive and negative values. However, in the cortex, all connections originating from the same presynaptic neuron have the same sign, either excitatory or inhibitory. This experimental observation, called Dale’s law, gives rise to a primary classification of neurons as excitatory or inhibitory.

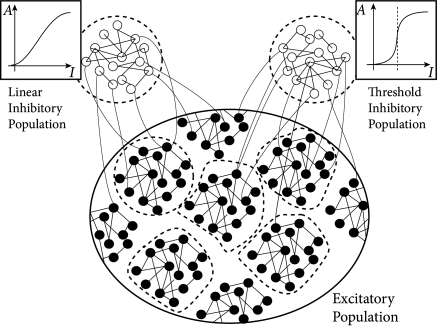

In Chapter 16 we started with models containing separate populations of excitatory and inhibitory neurons, but could show that the model dynamics are, under certain conditions, equivalent to an effective network where the excitatory populations excite themselves but inhibit each other. Thus explicit inhibition was replaced by an effective inhibition. Here we take the inverse approach and transform the effective mutual inhibition of neurons in the Hopfield network into an explicit inhibition via populations of inhibitory neurons.

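In order to keep the arguments transparent, let us stick to discrete time and work with random patterns $\xi_i^\mu \in \{0,1\}$ with mean activity $a$. We take weights $w_{ij} = c' \sum_\mu (\xi_i^\mu - b)(\xi_j^\mu - a)$ and introduce a discrete-time spike variable $\sigma_i = (S_i + 1)/2$ so that $\sigma_i = 1$ can be interpreted as a spike and $\sigma_i = 0$ as the quiescent state. Under the assumption that each pattern $\mu$ has exactly $aN$ entries with $\xi_i^\mu = 1$, we find that the input potential $h_i = \sum_j w_{ij} S_j$ can be rewritten with the spike variable $\sigma$

$$h_i(t) = 2c' \sum_j \sum_\mu (\xi_i^\mu - b)\, \xi_j^\mu\, \sigma_j(t) \; - \; 2c'\, a \sum_j \sum_\mu (\xi_i^\mu - b)\, \sigma_j(t) \, . \tag{17.45}$$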

In the following we choose $b = 0$ and a positive prefactor $c' > 0$. Then the first sum on the right-hand side of Eq. (17.45) describes excitatory and the second one inhibitory interactions.
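The split of the input potential into an excitatory and an inhibitory part can be verified numerically. The sketch assumes weights $w_{ij} = c \sum_\mu (\xi_i^\mu - b)(\xi_j^\mu - a)$, binary states $S_j = \pm 1$ with spike variable $\sigma_j = (S_j + 1)/2$, and patterns with exactly $aN$ active entries. The sizes and the value of `c` are hypothetical.

```python
import numpy as np

def fixed_activity_patterns(M, N, a, rng):
    """M random 0/1 patterns, each with exactly round(a*N) active entries."""
    n_active = int(round(a * N))
    xi = np.zeros((M, N))
    for mu in range(M):
        xi[mu, rng.choice(N, size=n_active, replace=False)] = 1.0
    return xi

def split_input(xi, sigma, a, b, c):
    """Excitatory and inhibitory parts of the input, written with spike variables."""
    exc = 2 * c * (xi - b).T @ (xi @ sigma)                 # 2c sum_j sum_mu (xi_i - b) xi_j sigma_j
    inh = 2 * c * a * (xi - b).sum(axis=0) * sigma.sum()    # 2c a sum_mu (xi_i - b) sum_j sigma_j
    return exc, inh

rng = np.random.default_rng(0)
N, M, a, b = 200, 6, 0.1, 0.0        # hypothetical sizes; b = 0 as in the text
c = 1.0 / N                          # assumed positive prefactor
xi = fixed_activity_patterns(M, N, a, rng)

S = rng.choice([-1.0, 1.0], size=N)  # arbitrary network state
sigma = (S + 1) / 2                  # spike variable: 1 = spike, 0 = quiescent

W = c * (xi - b).T @ (xi - a)
h = W @ S                            # input potential from the original weights
exc, inh = split_input(xi, sigma, a, b, c)
print(np.allclose(h, exc - inh))
```

The constant term that arises from $S_j = 2\sigma_j - 1$ vanishes because $\sum_j (\xi_j^\mu - a) = 0$ when each pattern has exactly $aN$ active entries. With $b = 0$ both `exc` and `inh` are non-negative, so the signs of the two terms are fixed.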

In order to interpret the second term as arising from inhibitory neurons, we make the following assumptions. First, inhibitory neurons have a linear gain function and fire stochastically with probability

$$\mathrm{Prob}\left\{ \sigma_k = 1 \mid h_k^{\rm inh} \right\} = \gamma\, h_k^{\rm inh} \tag{17.46}$$

where the constant $\gamma$ takes care of the units and $k$ is the index of the inhibitory neuron with $1 \le k \le N^{\rm inh}$. Second, each inhibitory neuron $k$ receives input from $C$ excitatory neurons. Connections are random and of equal weight $1/C$. Thus, the input potential of neuron $k$ is $h_k^{\rm inh} = (1/C) \sum_{j \in \Gamma_k} \sigma_j$ where $\Gamma_k$ is the set of presynaptic neurons. Third, the connection from an inhibitory neuron $k$ back to an excitatory neuron $i$ has weight

$$w_{ik}^{\rm inh} = - \frac{2 c'\, a\, N}{\gamma\, N^{\rm inh}} \sum_\mu \xi_i^\mu \, . \tag{17.47}$$

Thus, inhibitory weights onto a neuron $i$ which participates in many patterns are stronger than onto one which participates in only a few patterns. Fourth, the number $N^{\rm inh}$ of inhibitory neurons is large. Taken together, the four assumptions give rise to an average inhibitory feedback to each excitatory neuron proportional to the total activity $\sum_j \sigma_j$. In other words, the inhibition caused by the inhibitory population is equivalent to the second term in Eq. (17.45).
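The four assumptions can be tested in a small Monte Carlo sketch. It is only one possible concrete reading of them. Each inhibitory neuron averages the activity of `C` randomly chosen excitatory neurons, drawn with replacement for simplicity. It fires with probability `gamma` times that average. It projects back with a weight of magnitude $2 c\, a\, N\, n_i / (\gamma N^{\rm inh})$, where $n_i = \sum_\mu \xi_i^\mu$ is the number of patterns in which neuron $i$ participates. All numerical values and this normalization are assumptions.

```python
import numpy as np

def inhibitory_feedback(sigma, n_patterns_i, a, c, gamma=1.0,
                        n_inh=20000, C=100, seed=0):
    """Magnitude of the inhibition that one excitatory neuron receives in one time step."""
    rng = np.random.default_rng(seed)
    N = sigma.size
    pre = rng.integers(0, N, size=(n_inh, C))    # random presynaptic sets Gamma_k
    h_inh = sigma[pre].mean(axis=1)              # (1/C) * sum over Gamma_k of sigma_j
    fired = rng.random(n_inh) < gamma * h_inh    # linear gain, stochastic firing
    w = 2 * c * a * N * n_patterns_i / (gamma * n_inh)   # assumed I-to-E weight magnitude
    return w * fired.sum()

rng = np.random.default_rng(42)
N, a, c = 1000, 0.1, 1.0 / 1000                  # hypothetical network parameters
sigma = (rng.random(N) < 0.1).astype(float)      # about 10% of excitatory neurons spike
n_i = 7                                          # neuron i participates in 7 patterns

simulated = inhibitory_feedback(sigma, n_i, a, c)
target = 2 * c * a * n_i * sigma.sum()           # inhibitory term of the effective model
print("simulated:", simulated, "target:", target)
```

With many inhibitory neurons the summed stochastic feedback concentrates around the target value, which is why the fourth assumption (large $N^{\rm inh}$) is needed.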

Because of our choice $b = 0$, patterns are only in weak competition with each other and several patterns can become active at the same time. In order to also limit the total activity of the network, it is useful to add a second pool of inhibitory neurons which turn on whenever the total number of spikes in the network surpasses $aN$. Note that biological cortical tissue contains many different types of inhibitory interneurons which are thought to play different functional roles.

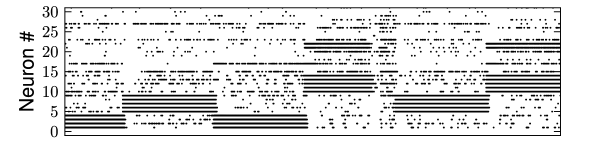

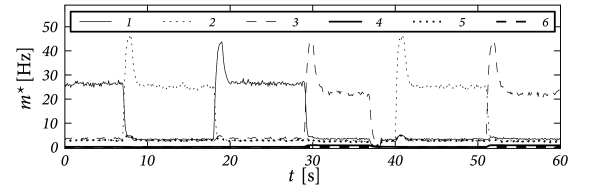

Fig. 17.11 shows that the above arguments carry over to the case of integrate-and-fire neurons in continuous time. We emphasize that the network of 8000 excitatory neurons and two groups of inhibitory neurons (2000 neurons each) has stored 90 patterns of activity $a$. Therefore each neuron participates in many patterns (111).