12.4 From Microscopic to Macroscopic

In this section we will give a first example of how to make the transition from the properties of single spiking neurons to the population activity in a homogeneous group of neurons. We focus here on stationary activity.

In order to understand the dynamic response of a population of neurons to a changing stimulus, as well as for an analysis of the stability of the dynamics with respect to oscillations or perturbations, we will need further mathematical tools to be developed in the next two chapters. As we will see in Chapters 13 and 14, the dynamics depends, apart from the coupling, also on the specific choice of neuron model. However, if we want to predict the level of stationary activity in a large network of neurons, that is, if we do not worry about the temporal aspects of population activity, then the knowledge of the single-neuron gain function (f-I curve, or frequency-current relation) is completely sufficient to predict the population activity.

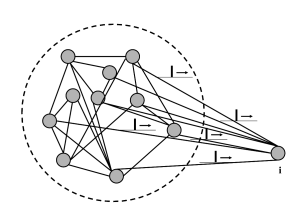

The basic argument is the following (Fig. 12.13). In a homogeneous population, each neuron receives input from many others, either from the same population, or from other populations, or both. Thus, a single neuron takes as its input a large (and in the case of a fully connected network even a complete) sample of the momentary population activity $A(t)$. This has been made explicit in Eq. (12.4) for a single population and in Eq. (12.11) for multiple populations. To keep the arguments simple, we focus in the following on a single fully connected population. In a homogeneous population, no neuron is different from any other one, so that all neurons in the network receive the same input.

Moreover, under the assumption of stationary network activity, the neurons can be characterized by a constant mean firing rate $\nu$. In this case, the population activity $A_0$ must be directly related to the constant single-neuron firing rate $\nu$. We show in Section 12.4.2 that, in a homogeneous population, the two are in fact equal: $A_0 = \nu$. We emphasize that the argument sketched here and in the next paragraphs is completely independent of the choice of neuron model and holds for detailed biophysical models of the Hodgkin-Huxley type just as well as for an adaptive exponential integrate-and-fire model or a spike response model with escape noise. The argument for the stationary activity will now be made more precise.

12.4.1 Stationary activity and asynchronous firing

We define asynchronous firing of a neuronal population as a macroscopic firing state with constant activity $A(t) = A_0$. In this section we show that in a homogeneous population such asynchronous firing states exist and derive the value $A_0$ from the properties of a single neuron. In fact, we will see that the only relevant single-neuron property is its gain function, i.e., its mean firing rate as a function of input. More specifically, we will show that the knowledge of the gain function $g(I_0)$ of a single neuron and the coupling parameter $J_0$ is sufficient to determine the activity $A_0$ during asynchronous firing.

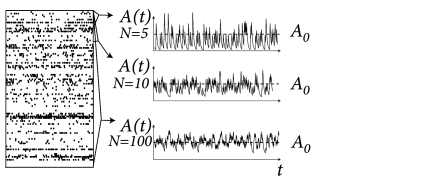

At first glance it might look absurd to search for a constant activity $A(t) = A_0$, because the population activity has been defined in Eq. (12.1) as a sum over $\delta$-functions. Empirically, the population activity is determined as the spike count across the population in a finite time interval or, more generally, after smoothing the $\delta$-functions of the spikes with some filter. If the filter is kept fixed while the population size $N$ is increased, the population activity in the stationary state of asynchronous firing approaches the constant value $A_0$ (Fig. 12.14). This argument will be made more precise below.

12.4.2 Stationary Activity as Single-Neuron Firing Rate

The population activity $A_0$ is equal to the mean firing rate $\nu_i$ of a single neuron in the population. This result follows from a trivial counting argument and can best be explained by a simple example. Suppose that in a homogeneous population of $N = 1\,000$ neurons we observe over a time $T = 1$ s a total number of 25 000 spikes. Under the assumption of stationary activity $A(t) = A_0$ the total number of spikes is $A_0\,N\,T$, so that the population firing rate is $A_0 = 25$ Hz. Since all 1 000 neurons are identical and receive the same input, the total number of 25 000 spikes corresponds to 25 spikes per neuron, so that the firing rate (spike count divided by measurement time) of a single neuron $i$ is $\nu_i = 25$ Hz. Thus $A_0 = \nu_i$.

More generally, the assumption of stationarity implies that averaging over time yields, for each single neuron, a good estimate of the firing rate $\nu_i$. The assumption of homogeneity implies that all neurons in the population have the same parameters and are statistically indistinguishable. Therefore a spatial average across the population and the temporal average give the same result:

$$A_0 = \nu_i\,, \tag{12.17}$$

where the index $i$ refers to the firing rate of a single, but arbitrary neuron.

For an infinitely large population, Eq. (12.17) gives a formula to predict the population activity in the stationary state. However, real populations have a finite size $N$ and each neuron in the population fires at moments determined by its intrinsic dynamics and possibly some intrinsic noise. The population activity $A(t)$ has been defined in Eq. (12.1) as an empirically observable quantity. In a finite population, the empirical activity fluctuates and we can, with the above arguments, only predict its expectation value

$$\langle A_0 \rangle = \nu_i\,. \tag{12.18}$$

The neuron models discussed in Parts I and II enable us to calculate the mean firing rate $\nu$ for a stationary input, characterized by a mean $I_0$ and, potentially, fluctuations or noise of amplitude $\sigma$. The mean firing rate is given by the gain function

$$\nu = g_\sigma(I_0)\,, \tag{12.19}$$

where the subscript $\sigma$ is intended to remind the reader that the shape of the gain function depends on the level of noise (see Section 12.2.2). Thus, considering the pair of equations (12.18) and (12.19), we may conclude that the expected population activity in the stationary state can be predicted from the properties of single neurons.

Example: Theory vs. Simulation, Expectation vs. Observation

How can we compare the population activity $\langle A_0 \rangle$ calculated in Eq. (12.18) with simulation results? How can we check whether a population is in a stationary state of asynchronous firing? In a simulation of a population containing a finite number $N$ of spiking neurons, the observed activity fluctuates. Formally, the (observable) activity $A(t)$ has been defined in Eq. (12.1) as a sum over $\delta$-functions. The activity $\langle A_0 \rangle$ predicted by the theory is the expectation value of the observed activity. Mathematically speaking, the observed activity $A$ converges for $N \to \infty$ in the weak topology to its expectation value. More practically, this implies that we should convolve the observed activity with a continuous test function $\gamma(s)$ before comparing with $\langle A_0 \rangle$. We take a function $\gamma$ with the normalization $\int_{s^{\min}}^{s^{\max}} \gamma(s)\,ds = 1$. For the sake of simplicity we assume furthermore that $\gamma$ has finite support so that $\gamma(s) = 0$ for $s < s^{\min}$ or $s > s^{\max}$. We define

$$\bar{A}(t) = \int_{s^{\min}}^{s^{\max}} \gamma(s)\, A(t-s)\, ds\,. \tag{12.20}$$

The firing is asynchronous if the averaged fluctuations $\langle |\bar{A}(t) - A_0|^2 \rangle$ decrease with increasing $N$; cf. Fig. 12.14.
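The scaling of the fluctuations with population size can be probed numerically. The following is a minimal sketch, not a network simulation: it assumes independent neurons that emit a spike in each 1 ms bin with a fixed probability (a stand-in for a population in the asynchronous state), and it uses a rectangular test function of width 10 ms evaluated on non-overlapping windows. The function names, the rate of 20 Hz, and all other parameters are illustrative assumptions.

```python
import random


def filtered_activity(n_neurons, rate_hz, t_total=2.0, dt=0.001,
                      window=0.010, seed=0):
    """Filtered population activity (in Hz) of independent stochastic neurons.

    Each neuron spikes in a time bin of length dt with probability rate_hz * dt.
    The test function is a rectangle of width `window`, evaluated on
    non-overlapping windows.
    """
    rng = random.Random(seed)
    n_bins = int(round(t_total / dt))
    p_spike = rate_hz * dt
    counts = [0] * n_bins                      # spikes per bin, summed over neurons
    for _ in range(n_neurons):
        for k in range(n_bins):
            if rng.random() < p_spike:
                counts[k] += 1
    bins_per_window = int(round(window / dt))
    smoothed = []
    for start in range(0, n_bins - bins_per_window + 1, bins_per_window):
        n_spikes = sum(counts[start:start + bins_per_window])
        smoothed.append(n_spikes / (n_neurons * window))
    return smoothed


def mean_squared_fluctuation(values, a0):
    """Average of (filtered activity - a0)^2 over all windows."""
    return sum((v - a0) ** 2 for v in values) / len(values)


# Fluctuations around A_0 = 20 Hz for three population sizes.
msf = {n: mean_squared_fluctuation(filtered_activity(n, rate_hz=20.0), a0=20.0)
       for n in (10, 100, 1000)}
for n, value in msf.items():
    print(n, value)
```

For independent neurons the mean squared fluctuation is expected to shrink roughly in proportion to $1/N$, which is the behavior that identifies asynchronous firing in the criterion above.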

In order to keep the notation light, we normally write in this book simply $A(t)$ even in places where it would be more precise to write $\langle A(t) \rangle$ (the expected population activity at time $t$, calculated by theory) or $\bar{A}(t)$ (the filtered population activity, derived from empirical measurement in a simulation or experiment). Only at places where the distinction between $A$, $\bar{A}$, and $\langle A \rangle$ is crucial do we use the explicit notation with bars or angle brackets.

12.4.3 Activity of a fully connected network

The gain function of a neuron is the firing rate $\nu$ as a function of its input current $I$. In the previous subsection, we have seen that the firing rate is equivalent to the expected value of the population activity $A_0$ in the state of asynchronous firing. We thus have

$$\langle A_0 \rangle = \nu = g_\sigma(I)\,. \tag{12.21}$$

The gain function in the absence of any noise (fluctuation amplitude $\sigma = 0$) will be denoted by $g_0$.

Recall that the total input $I$ to a neuron of a fully connected population consists of the external input $I^{\mathrm{ext}}(t)$ and a component that is due to the interaction of the neurons within the population. We copy Eq. (12.4) to have the explicit expression of the input current

$$I(t) = w_0\, N \int_0^\infty \alpha(s)\, A(t-s)\, ds + I^{\mathrm{ext}}(t)\,. \tag{12.22}$$

Since the overall strength of the interaction is set by $w_0$, we can impose a normalization $\int_0^\infty \alpha(s)\,ds = 1$. We now exploit the assumption of stationarity and set $\int_0^\infty \alpha(s)\, A(t-s)\, ds = A_0$. The left-hand side is the filtered observed quantity which is in reality never exactly constant, but if the number $N$ of neurons in the network is sufficiently large, we do not have to worry about small fluctuations around $A_0$. Note that $\alpha$ here plays the role of the test function introduced in the previous example.

Therefore, the assumption of stationary activity $A_0$ combined with the assumption of constant external input $I^{\mathrm{ext}}(t) = I_0^{\mathrm{ext}}$ yields a constant total driving current

$$I_0 = w_0\, N\, A_0 + I_0^{\mathrm{ext}}\,. \tag{12.23}$$

Together with Eq. (12.21) we arrive at an implicit equation for the population activity $A_0$,

$$A_0 = g_0\!\left(J_0\, A_0 + I_0^{\mathrm{ext}}\right)\,, \tag{12.24}$$

where $g_0$ is the noise-free gain function of single neurons and $J_0 = w_0\, N$. In words, the population activity in a homogeneous network of neurons with all-to-all connectivity can be calculated if we know the single-neuron gain function $g_0$ and the coupling strength $J_0$. This is the central result of this section, which is independent of any specific assumption about the neuron model.

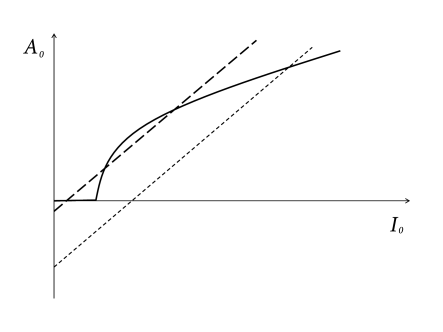

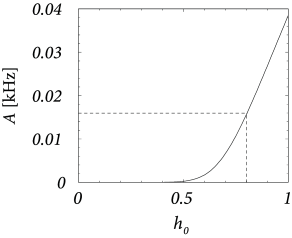

A graphical solution of Eq. (12.24) is indicated in Figure 12.15 where two functions are plotted: first, the mean firing rate $\nu = g_0(I_0)$ as a function of the input $I_0$ (i.e., the gain function); second, the population activity $A_0$ as a function of the total input $I_0$ (i.e., $A_0 = [I_0 - I_0^{\mathrm{ext}}]/J_0$; see Eq. (12.23)). The intersections of the two functions yield fixed points of the activity $A_0$.
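The graphical construction has a direct numerical counterpart: scan the activity axis for sign changes of $g_0(J_0 A_0 + I_0^{\mathrm{ext}}) - A_0$ and refine each crossing by bisection. The sketch below assumes a sigmoidal gain function with made-up parameters (maximal rate 100 Hz, half-activation at a unit-free input of 1.0); the function names, the gain function, and the values of $J_0$ and $I_0^{\mathrm{ext}}$ are hypothetical and chosen only so that several fixed points coexist.

```python
import math


def gain(current, nu_max=100.0, i_half=1.0, width=0.1):
    """Hypothetical sigmoidal gain function g_0(I) in Hz (illustration only)."""
    return nu_max / (1.0 + math.exp(-(current - i_half) / width))


def fixed_points(j0, i_ext, a_max=120.0, n_grid=1200, tol=1e-9):
    """Return all A_0 in [0, a_max] that satisfy A_0 = gain(j0 * A_0 + i_ext)."""

    def mismatch(a):
        return gain(j0 * a + i_ext) - a

    roots = []
    step = a_max / n_grid
    for k in range(n_grid):
        lo, hi = k * step, (k + 1) * step
        f_lo, f_hi = mismatch(lo), mismatch(hi)
        if f_lo * f_hi >= 0.0:
            continue                      # no strict sign change in this cell
        while hi - lo > tol:              # bisection keeps the sign of f(lo) fixed
            mid = 0.5 * (lo + hi)
            if mismatch(mid) * f_lo > 0.0:
                lo = mid
            else:
                hi = mid
        roots.append(0.5 * (lo + hi))
    return roots


roots = fixed_points(j0=0.02, i_ext=0.1)
print(roots)
```

With these assumed parameters the scan finds three intersections: one close to zero activity, one at intermediate activity, and one near the maximal rate. As in the graphical solution, the procedure says nothing about which of them is stable.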

As an aside we note that the graphical construction is identical to that of the Curie-Weiss theory of ferromagnetism which can be found in any physics textbook. More generally, the structure of the equations corresponds to the mean-field solution of a system with feedback. As shown in Fig. 12.15, several solutions may coexist. We cannot conclude from the figure, whether one or several solutions are stable. In fact, it is possible that all solutions are unstable. In the latter case, the network leaves the state of asynchronous firing and evolves toward an oscillatory state. The stability analysis of the asynchronous state requires equations for the population dynamics, which will be discussed in Chapters 13 and 14.

The parameter $J_0$ introduced above in Eq. (12.24) implies, at least implicitly, a scaling of weights $w_{ij} = J_0/N$, as suggested earlier during the discussion of fully connected networks; cf. Eq. (12.6). The scaling with $1/N$ enables us to consider the limit of a large number of neurons: if we keep $J_0$ fixed, the equation remains the same, even if $N$ increases. Because fluctuations of the observed population activity $A(t)$ around $A_0$ decrease as $N$ increases, Eq. (12.24) becomes exact in the limit of $N \to \infty$.

Example: Leaky integrate-and-fire model with diffusive noise

We consider a large and fully connected network of identical leaky integrate-and-fire neurons with homogeneous coupling $w_{ij} = J_0/N$ and normalized postsynaptic currents ($\int_0^\infty \alpha(s)\,ds = 1$). In the state of asynchronous firing, the total input current driving a typical neuron of the network is then

$$I_0 = I_0^{\mathrm{ext}} + J_0\, A_0\,. \tag{12.25}$$

In addition, each neuron receives individual diffusive noise of variance $\sigma^2$ that could represent spike arrival from other populations. The single-neuron gain function (476) in the presence of diffusive noise has already been stated in Chapter 8; cf. Eq. (8.54). We use the formula of the gain function to calculate the population activity

$$A_0 = g_\sigma(I_0) = \left\{ \tau_m \sqrt{\pi} \int_{(u_r - R I_0)/\sigma}^{(\vartheta - R I_0)/\sigma} \exp\!\left(x^2\right) \left[1 + \mathrm{erf}(x)\right] dx \right\}^{-1}\,, \tag{12.26}$$

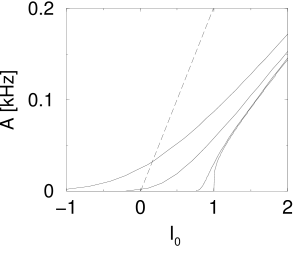

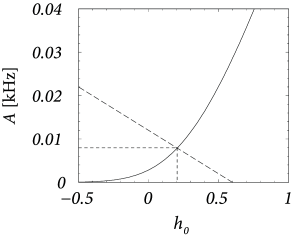

where $\sigma$ with units of voltage measures the amplitude of the noise. The fixed points for the population activity are once more determined by the intersections of these two functions; cf. Fig. 12.16.
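The gain function for diffusive noise is easy to evaluate numerically. The sketch below assumes the Siegert form $\nu = \{\tau_m \sqrt{\pi} \int_{(u_r - h_0)/\sigma}^{(\vartheta - h_0)/\sigma} e^{x^2}[1 + \mathrm{erf}(x)]\,dx\}^{-1}$ with $h_0 = R I_0$, and it uses illustrative parameters that are assumptions of this example: threshold $\vartheta = 1$, reset $u_r = 0$, and $\tau_m = 10$ ms. Integration is done with a plain Simpson rule so that only the standard library is needed; the function names are hypothetical.

```python
import math


def _integrand(x):
    """exp(x^2) * (1 + erf(x)), written so that it stays finite for x << 0."""
    if x < -5.0:
        # Asymptotic expansion of exp(y^2) * erfc(y) for large y = -x.
        y = -x
        return (1.0 - 0.5 / y**2 + 0.75 / y**4) / (y * math.sqrt(math.pi))
    return math.exp(x * x) * math.erfc(-x)


def siegert_rate(h0, sigma, theta=1.0, u_reset=0.0, tau_m=0.010, n_steps=2000):
    """Stationary rate (Hz) of a leaky integrate-and-fire neuron with diffusive noise.

    h0 = R * I_0 is the mean input in voltage units and sigma the noise
    amplitude, both in units where the threshold theta equals 1.
    """
    lower = (u_reset - h0) / sigma
    upper = (theta - h0) / sigma
    if upper > 25.0:
        return 0.0                        # deep subthreshold, rate is negligible
    step = (upper - lower) / n_steps      # n_steps must be even for Simpson's rule
    total = _integrand(lower) + _integrand(upper)
    for k in range(1, n_steps):
        total += (4.0 if k % 2 else 2.0) * _integrand(lower + k * step)
    integral = total * step / 3.0
    return 1.0 / (tau_m * math.sqrt(math.pi) * integral)


rate = siegert_rate(h0=0.8, sigma=0.2)
print(rate)
```

Inserting $h_0 = R\,(I_0^{\mathrm{ext}} + J_0 A_0)$ into `siegert_rate` and searching for values where the returned rate equals $A_0$ gives the fixed points of the population activity for a given noise level.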

12.4.4 Activity of a randomly connected network

In the preceding subsections we have studied the stationary state of a large population of neurons for a given noise level. In Fig. 12.16 the noise was modeled explicitly as diffusive noise and can be interpreted as the effect of stochastic spike arrival from other populations or some intrinsic noise source inside each neuron. In other words, noise was added explicitly to the model while the input current $I_i(t)$ to neuron $i$ arising from other neurons in the population was constant and the same for all neurons: $I_i = I_0$.

In a randomly connected network (and similarly in a fully connected network of finite size), the summed synaptic input current arising from other neurons in the population is, however, not constant but fluctuates around a mean value $I_0$, even if the population is in a stationary state of asynchronous activity. In this subsection, we discuss how to mathematically treat the additional noise arising from the network.

We assume that the network is in a stationary state where each neuron fires stochastically, independently, and at a constant rate $\nu$, so that the firing of different neurons exhibits only chance coincidences. Suppose that we have a randomly connected network of $N$ neurons where each neuron receives input from $C_{\mathrm{pre}}$ presynaptic partners. All weights are set equal to $w_{ij} = w$.

We are going to determine the firing rate $\nu$ of a typical neuron in the network self-consistently as follows. If all neurons fire at a rate $\nu$, then the mean input current to neuron $i$ generated by its $C_{\mathrm{pre}}$ presynaptic partners is

$$I_0 = C_{\mathrm{pre}}\, q\, w\, \nu\,, \tag{12.27}$$

where $q = \int_0^\infty \alpha(s)\,ds$ denotes the integral over the postsynaptic current and can be interpreted as the total electric charge delivered by a single input spike; cf. Section 8.2 in Chapter 8.

The input current is not constant but fluctuates with a variance $\sigma_I^2$ given by

$$\sigma_I^2 = C_{\mathrm{pre}}\, w^2\, q_2\, \nu \quad \text{with} \quad q_2 = \int_0^\infty \alpha^2(s)\, ds\,. \tag{12.28}$$

Thus, if neurons fire at constant rate $\nu$, we know the mean input current and its variance. In order to close the argument we use the single-neuron gain function

$$\nu = g_\sigma(I_0)\,, \tag{12.29}$$

which is supposed to be known for arbitrary noise levels $\sigma_I$. If we insert the mean $I_0$ from Eq. (12.27) and its standard deviation $\sigma_I$ from Eq. (12.28), we arrive at an implicit equation for the firing rate $\nu$ which we need to solve numerically. The mean population activity is then $\langle A_0 \rangle = \nu$.

We emphasize that the above argument does not require any specific neuron model. In fact, it holds for biophysical neuron models of the Hodgkin-Huxley type as well as for integrate-and-fire models. The advantage of a leaky integrate-and-fire model is that an explicit mathematical formula for the gain function is available. An example will be given below. But we can use Eqs. (12.27) - (12.29) just as well for a homogeneous population of biophysical neuron models. The only difference is that we have to numerically determine the single-neuron gain function for different noise levels (with noise of the appropriate autocorrelation) before starting to solve the network equations.

Please also note that the above argument is not restricted to a network consisting of a single population. It can be extended to several interacting populations. In this case, the expressions for the mean and variance of the input current contain contributions from the other populations, as well as from the self-interaction in the network. An example with interacting excitatory and inhibitory populations is given below.

The arguments that have been developed above for networks with a fixed number of presynaptic partners can also be generalized to networks with asymmetric random connectivity of fixed connection probability $p$ and synaptic scaling (15; 487; 91; 532; 44).

Brunel network: excitatory and inhibitory populations

The self-consistency argument will now be applied to the case of two interacting populations, an excitatory population with $N_E$ neurons and an inhibitory population with $N_I$ neurons. The neurons in both populations are modeled by leaky integrate-and-fire neurons. For the sake of convenience, we set the resting potential to zero ($u_{\mathrm{rest}} = 0$). We have seen in Chapter 8 that leaky integrate-and-fire neurons with diffusive noise generate spike trains with a broad distribution of interspike intervals when they are driven in the sub-threshold regime. We will use this observation to construct a self-consistent solution for the stationary states of asynchronous firing.

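We assume that excitatory and inhibitory neurons have the same parameters $\vartheta$, $R$, $\tau_m$, and $u_r$. In addition, all neurons are driven by a common external current $I^{\mathrm{ext}}$. Each neuron in the population receives $C_E$ synapses from excitatory neurons with weight $w_E > 0$ and $C_I$ synapses from inhibitory neurons with weight $w_I < 0$. If an input spike arrives at the synapses of neuron $i$ from a presynaptic neuron $j$, its membrane potential changes by an amount $\Delta u_E = w_E\, q\, R/\tau_m$ if $j$ is excitatory and $\Delta u_I = \Delta u_E\, w_I/w_E$ if $j$ is inhibitory. Here $q$ has units of electric charge. We set

$$\gamma = \frac{C_I}{C_E} \quad \text{and} \quad g = -\frac{w_I}{w_E} = -\frac{\Delta u_I}{\Delta u_E}\,. \tag{12.30}$$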

Since excitatory and inhibitory neurons receive the same number of input connections in our model, we assume that they fire with a common firing rate $\nu$. The total input current generated by the external current and by the lateral couplings is

$$I_0 = I^{\mathrm{ext}} + q \sum_j \nu_j\, w_j = I^{\mathrm{ext}} + q\, \nu\, w_E\, C_E\, [1 - \gamma\, g]\,. \tag{12.31}$$

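Because each input spike causes a jump of the membrane potential, it is convenient to measure the noise strength by the variance $\sigma_u^2$ of the membrane potential (as opposed to the variance $\sigma_I^2$ of the input). With the definitions of Chapter 8, we set $\sigma_u^2 = 0.5\, \sigma^2$ where, from Eq. (8.42),

$$\sigma^2 = \sum_j \nu_j\, \tau_m\, (\Delta u_j)^2 = \nu\, \tau_m\, \Delta u_E^2\, C_E\, [1 + \gamma\, g^2]\,. \tag{12.32}$$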

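The stationary firing rate $A_0$ of the population with mean input $I_0$ and noise amplitude $\sigma$ is copied from Eq. (12.26) and repeated here for convenience

$$A_0 = \nu = g_\sigma(I_0) = \left\{ \tau_m \sqrt{\pi} \int_{(u_r - R I_0)/\sigma}^{(\vartheta - R I_0)/\sigma} \exp\!\left(x^2\right) \left[1 + \mathrm{erf}(x)\right] dx \right\}^{-1}\,. \tag{12.33}$$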

In a stationary state we must have $A_0 = \nu$. To get the value of $A_0$ we must therefore solve Eqs. (12.31) – (12.33) simultaneously for $\nu$ and $\sigma$. Since the gain function, i.e., the firing rate as a function of the input $I_0$, depends on the noise level $\sigma$, a simple graphical solution as in Fig. 12.15 is no longer possible. Numerical solutions of Eqs. (12.31) – (12.33) have been obtained by Amit and Brunel (21, 20). For a mixed graphical-numerical approach see Mascaro and Amit (332).

In the following paragraphs we give some examples of how to construct self-consistent solutions. For convenience we always set $\vartheta = 1$, $q = 1$, $R = 1$, and $\tau_m = 10$ ms and work with a unit-free current $I \to h$. Our aim is to find connectivity parameters such that the mean input to each neuron is $h_0 = 0.8$ and its noise amplitude $\sigma = 0.2$.

Figure 12.17A shows that $h_0 = 0.8$ and $\sigma = 0.2$ correspond to a firing rate of $A_0 = \nu \approx 16$ Hz. We set $\Delta u_E = 0.025$, i.e., 40 simultaneous spikes are necessary to make a neuron fire. Inhibition has the same strength $w_I = -w_E$ so that $g = 1$. We constrain our search to solutions with $C_E = C_I$ so that $\gamma = 1$. Thus, on average, excitation and inhibition balance each other. To get an average input potential of $h_0 = 0.8$ we therefore need a constant driving current $I^{\mathrm{ext}} = 0.8$.

To arrive at $\sigma = 0.2$ we solve Eq. (12.32) for $C_E$ and find $C_E = C_I = 200$. Thus for this choice of the parameters the network generates enough noise to allow a stationary solution of asynchronous firing at 16 Hz.
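The arithmetic of this balanced example can be checked in a few lines. The sketch assumes that the mean input in voltage units is $h_0 = h^{\mathrm{ext}} + \tau_m\, \nu\, \Delta u_E\, C_E\, (1 - \gamma g)$ and that the noise amplitude obeys $\sigma^2 = \tau_m\, \nu\, \Delta u_E^2\, C_E\, (1 + \gamma g^2)$, with $\vartheta = 1$ and $\tau_m = 10$ ms. The target values $h_0 = 0.8$ and $\sigma = 0.2$, the jump size $\Delta u_E = 1/40$, and the function names are assumptions of this illustration.

```python
def mean_potential(h_ext, nu, c_e, du_e, gamma, g, tau_m=0.010):
    """Mean input h_0 = R * I_0 (threshold units) from external drive and recurrence."""
    return h_ext + tau_m * nu * du_e * c_e * (1.0 - gamma * g)


def noise_amplitude(nu, c_e, du_e, gamma, g, tau_m=0.010):
    """Noise amplitude sigma generated by the recurrent spike input."""
    return (tau_m * nu * du_e**2 * c_e * (1.0 + gamma * g**2)) ** 0.5


def in_degree_for_noise(sigma, nu, du_e, gamma, g, tau_m=0.010):
    """Number C_E of excitatory inputs needed to reach a target noise amplitude."""
    return sigma**2 / (tau_m * nu * du_e**2 * (1.0 + gamma * g**2))


# Balanced case: gamma = 1 and g = 1, rate 16 Hz, jump size 1/40 of the threshold.
c_e = in_degree_for_noise(sigma=0.2, nu=16.0, du_e=0.025, gamma=1.0, g=1.0)
h0 = mean_potential(h_ext=0.8, nu=16.0, c_e=c_e, du_e=0.025, gamma=1.0, g=1.0)
print(c_e, h0)
```

Because $\gamma g = 1$ in the balanced case, the recurrent contribution to the mean cancels and $h_0$ equals the external drive, while the recurrent spikes still contribute the full variance.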

Note that, for the same parameters, the inactive state where all neurons are silent is also a solution. Using the methods discussed in this section we cannot say anything about the stability of these states. For the stability analysis see Chapter 13.

Example: Inhibition dominated network

About eighty to ninety percent of the neurons in the cerebral cortex are excitatory and the remaining ten to twenty percent inhibitory. Let us suppose that we have $N_E = 8\,000$ excitatory and $N_I = 2\,000$ inhibitory neurons in a cortical column. We assume random connectivity with a connection probability of ten percent and take $C_E = 800$, $C_I = 200$, so that $\gamma = 1/4$. As before, spikes arriving at excitatory synapses cause a voltage jump $\Delta u_E = 0.025$, i.e., an action potential can be triggered by the simultaneous arrival of 40 presynaptic spikes at excitatory synapses. If neurons are driven in the regime close to threshold, inhibition is rather strong and we take $\Delta u_I = -0.125$ so that $g = 5$. Even though we have fewer inhibitory than excitatory neurons, the mean feedback is then dominated by inhibition since $\gamma\, g > 1$. We search for a consistent solution of Eqs. (12.31) – (12.33) with a spontaneous activity of $\nu = 8$ Hz.

Given the above parameters, the noise amplitude is $\sigma \approx 0.54$; cf. Eq. (12.32). The gain function of integrate-and-fire neurons gives us for $\nu = 8$ Hz a corresponding total potential of $h_0 \approx 0.2$; cf. Fig. 12.17B. To attain $h_0$ we have to apply an external stimulus $h_0^{\mathrm{ext}} = R\, I^{\mathrm{ext}}$ which is slightly larger than $h_0$ since the net effect of the lateral coupling is inhibitory. Let us introduce the effective coupling $J^{\mathrm{eff}} = \tau_m\, C_E\, \Delta u_E\, (1 - \gamma\, g)$. Using the above parameters we find from Eq. (12.31) $h_0^{\mathrm{ext}} = h_0 - J^{\mathrm{eff}} A_0 \approx 0.6$.
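The simultaneous solution of the mean, variance, and gain-function equations can be sketched as a one-dimensional root search in the rate $\nu$. The example below assumes $\vartheta = 1$, $u_r = 0$, $\tau_m = 10$ ms, $C_E = 800$, $\gamma = 1/4$, $\Delta u_E = 0.025$, $g = 5$, and an external drive $h^{\mathrm{ext}} = 0.6$ in threshold units; these values, the Siegert form of the gain function, the search bracket, and all function names are assumptions of this illustration, not a reproduction of the published numerical solutions.

```python
import math

TAU_M = 0.010    # membrane time constant in seconds (assumed)
THETA = 1.0      # firing threshold; voltages are measured in units of it
U_RESET = 0.0    # reset potential (assumed)


def siegert_rate(h0, sigma, n_steps=2000):
    """Rate (Hz) of a leaky integrate-and-fire neuron with diffusive noise."""
    lower, upper = (U_RESET - h0) / sigma, (THETA - h0) / sigma
    if upper > 25.0:
        return 0.0                                    # negligible rate
    step = (upper - lower) / n_steps

    def f(x):                                         # exp(x^2) * (1 + erf(x))
        if x < -5.0:                                  # asymptotic form, avoids overflow
            return (1.0 - 0.5 / x**2) / (-x * math.sqrt(math.pi))
        return math.exp(x * x) * math.erfc(-x)

    total = f(lower) + f(upper)
    for k in range(1, n_steps):                       # Simpson's rule
        total += (4.0 if k % 2 else 2.0) * f(lower + k * step)
    return 1.0 / (TAU_M * math.sqrt(math.pi) * total * step / 3.0)


def network_rate(nu, h_ext, c_e, du_e, gamma, g):
    """Rate predicted by the gain function when all inputs fire at rate nu."""
    h0 = h_ext + TAU_M * nu * du_e * c_e * (1.0 - gamma * g)
    sigma = math.sqrt(TAU_M * nu * du_e**2 * c_e * (1.0 + gamma * g**2))
    return siegert_rate(h0, sigma)


def self_consistent_rate(h_ext, c_e, du_e, gamma, g, nu_lo=4.0, nu_hi=12.0, tol=1e-6):
    """Bisection for network_rate(nu) = nu inside the bracket [nu_lo, nu_hi]."""
    def mismatch(nu):
        return network_rate(nu, h_ext, c_e, du_e, gamma, g) - nu

    f_lo = mismatch(nu_lo)
    if f_lo * mismatch(nu_hi) > 0.0:
        raise ValueError("bracket does not contain a sign change")
    while nu_hi - nu_lo > tol:
        mid = 0.5 * (nu_lo + nu_hi)
        if mismatch(mid) * f_lo > 0.0:
            nu_lo = mid
        else:
            nu_hi = mid
    return 0.5 * (nu_lo + nu_hi)


nu_star = self_consistent_rate(h_ext=0.6, c_e=800, du_e=0.025, gamma=0.25, g=5.0)
print(nu_star)
```

The bracket matters: for a subthreshold external drive, the rate equation can have additional solutions at lower rates, so a different bracket may converge to a different fixed point. Stability of any of these solutions is not addressed by the root search.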

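The external input could, of course, be provided by (stochastic) spike arrival from other columns in the same or other areas of the brain. In this case Eq. (12.31) is to be replaced by

$$R\, I_0 = \tau_m\, \nu\, \Delta u_E\, C_E\, [1 - \gamma\, g] + \tau_m\, \nu_{\mathrm{ext}}\, \Delta u_{\mathrm{ext}}\, C_{\mathrm{ext}}\,, \tag{12.34}$$

with $C_{\mathrm{ext}}$ the number of connections that a neuron receives from neurons outside the population, $\Delta u_{\mathrm{ext}}$ their typical coupling strength characterized by the amplitude of the voltage jump, and $\nu_{\mathrm{ext}}$ their spike arrival rate (21; 20). Due to the extra stochasticity in the input, the variance of the membrane voltage is larger

$$\sigma^2 = \tau_m\, \nu\, \Delta u_E^2\, C_E\, [1 + \gamma\, g^2] + \tau_m\, \nu_{\mathrm{ext}}\, \Delta u_{\mathrm{ext}}^2\, C_{\mathrm{ext}}\,. \tag{12.35}$$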

The equations (12.33), (12.34) and (12.35) can be solved numerically (21; 20). The analysis of the stability of the solution is slightly more involved (78; 79), and will be considered in Chapter 13.

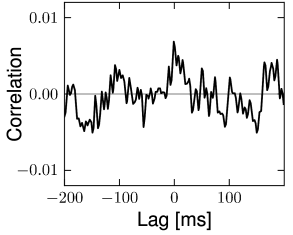

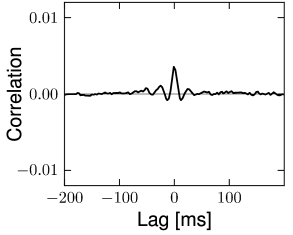

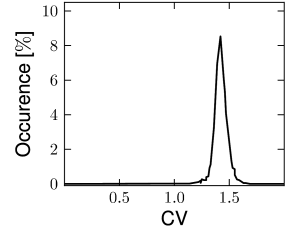

Example: Vogels-Abbott network

The structure of the network studied by Vogels and Abbott (537; 538; 68) is the same as that for the Brunel network: excitatory and inhibitory model neurons have the same parameters and are connected with the same probability within and across the two sub-populations. Therefore inhibitory and excitatory neurons fire with the same mean firing rate (see Section 12.4.4) and with hardly any correlations above chance level (Fig. 12.18). The two main differences to the Brunel network are: (i) the choice of random connectivity in the Vogels-Abbott network does not preserve the number of presynaptic partners per neuron so that some neurons receive more and others fewer than the mean number of connections; (ii) neurons in the Vogels-Abbott network communicate with each other by conductance-based synapses. A spike fired at time $t_j^f$ causes a change in conductance

$$\tau_g\, \frac{dg}{dt} = -g + \tau_g\, \Delta g \sum_f \delta(t - t_j^f)\,. \tag{12.36}$$

Thus, a synaptic input causes for $t > t_j^f$ a contribution to the conductance $g(t) = \Delta g\, \exp[-(t - t_j^f)/\tau_g]$. The neurons are leaky integrate-and-fire units.

As will be discussed in more detail in Section 13.6.3 of the next chapter, the dominant effect of conductance-based input is a decrease of the effective membrane time constant. In other words, if we consider a network of leaky integrate-and-fire neurons (with resting potential $u_{\mathrm{rest}} = 0$), we may use again the Siegert formula of Eq. (12.26)

$$A_0 = g_\sigma(I_0) = \left\{ \tau_{\mathrm{eff}} \sqrt{\pi} \int_{(u_r - R I_0)/\sigma}^{(\vartheta - R I_0)/\sigma} \exp\!\left(x^2\right) \left[1 + \mathrm{erf}(x)\right] dx \right\}^{-1} \tag{12.37}$$

in order to calculate the population activity $A_0$. The main difference to the current-based model is that the mean input current $I_0$ and the fluctuations $\sigma$ of the membrane voltage now also enter into the time constant $\tau_{\mathrm{eff}}$. The effective membrane time constant $\tau_{\mathrm{eff}}$ in simulations of conductance-based integrate-and-fire neurons is sometimes four or five times shorter than the raw membrane time constant $\tau_m$ (126; 537; 538).
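The shortening of the time constant follows from a simple estimate: if the fluctuating synaptic conductances are replaced by their mean values, the total membrane conductance is the sum of leak and mean synaptic conductances, and the effective time constant is the capacitance divided by this sum. The sketch below uses this mean-conductance approximation; the capacitance and conductance values and the function name are hypothetical numbers picked for illustration, not parameters of the Vogels-Abbott network.

```python
def effective_time_constant(c_m, g_leak, g_exc_mean, g_inh_mean):
    """Effective membrane time constant C / (g_L + <g_E> + <g_I>) in seconds."""
    return c_m / (g_leak + g_exc_mean + g_inh_mean)


C_M = 200e-12       # membrane capacitance in farad (hypothetical)
G_LEAK = 10e-9      # leak conductance in siemens (hypothetical)

tau_raw = effective_time_constant(C_M, G_LEAK, 0.0, 0.0)       # no synaptic input
tau_eff = effective_time_constant(C_M, G_LEAK, 10e-9, 30e-9)   # with mean synaptic load
print(tau_raw, tau_eff, tau_raw / tau_eff)
```

With a mean synaptic conductance four times the leak, as assumed here, the membrane integrates its input five times faster than the passive membrane, which is the order of magnitude quoted above.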

The Siegert formula holds in the limit of short synaptic time constants ($\tau_E \to 0$ and $\tau_I \to 0$). The assumption of short time constants for the conductances is necessary, because the Siegert formula is valid for white noise, corresponding to short pulses. However, the gain function of integrate-and-fire neurons for colored diffusive noise can also be determined (154); see Section 13.6.4 of Chapter 13.

12.4.5 Apparent stochasticity and chaos in a deterministic network

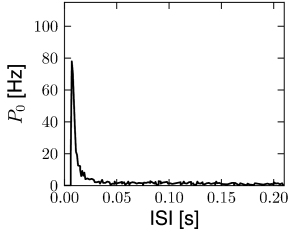

In this section we discuss how a network of deterministic neurons with fixed random connectivity can generate its own noise. In particular, we will focus on spontaneous activity and argue that there exist stationary states of asynchronous firing at low firing rates which have broad distributions of interspike intervals (Fig. 12.19) even though individual neurons are deterministic. The arguments made here have tacitly been used throughout Section 12.4.

Van Vreeswijk and Sompolinsky (1996, 1998) used a network of binary neurons to demonstrate broad interval distributions in deterministic networks. Amit and Brunel (21, 20) were the first to analyze a network of integrate-and-fire neurons with fixed random connectivity. While they allowed for an additional fluctuating input current, the major part of the fluctuations was in fact generated by the network itself. The theory of randomly connected integrate-and-fire neurons has been further developed by Brunel and Hakim (78). In a later study, Brunel (79) confirmed that asynchronous highly irregular firing can be a stable solution of the network dynamics in a completely deterministic network consisting of excitatory and inhibitory integrate-and-fire neurons. Work of Tim Vogels and Larry Abbott has shown that asynchronous activity at low firing rates can indeed be observed reliably in networks of leaky integrate-and-fire neurons with random coupling via conductance-based synapses (537; 538; 68). The analysis of randomly connected networks of integrate-and-fire neurons (79) is closely related to earlier theories for random nets of formal analog or binary neurons (15; 16; 17; 278; 368; 107; 91). However, the reset of neurons after each spike can be the cause of additional instabilities that were absent in these earlier networks with analog or binary neurons.

Random connectivity of the network plays a central role in the arguments. We focus on randomness with a fixed number $C_{\mathrm{pre}}$ of presynaptic partners. Sparse connectivity means that the ratio

$$\delta = \frac{C_{\mathrm{pre}}}{N} \ll 1 \tag{12.38}$$

is a small number. Formally, we may take the limit of $N \to \infty$ while keeping $C_{\mathrm{pre}}$ fixed. As a consequence of the sparse random network connectivity, two neurons $i$ and $j$ share only a small number of common inputs. In the limit of $C_{\mathrm{pre}}/N \to 0$ the probability that neurons $i$ and $j$ have a common presynaptic neuron vanishes. Thus, if the presynaptic neurons fire stochastically, then the input spike trains that arrive at neurons $i$ and $j$ are independent (123; 278). In that case, the input of neurons $i$ and $j$ can be described as uncorrelated stochastic spike arrival which in turn can be approximated by a diffusive noise model; cf. Chapter 8. Therefore, in a large and suitably constructed random network, correlations between spiking neurons can be arbitrarily low (426); cf. Fig. 12.18.
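How quickly shared input disappears can be estimated by counting. If two neurons each draw their presynaptic partners uniformly at random from the $N$ neurons of the network, the expected number of common partners is the number of inputs squared divided by $N$, which goes to zero for a fixed number of inputs and growing $N$. The sketch below compares this estimate with a small Monte Carlo experiment; it ignores details such as excluding self-connections, and the function names, network sizes, and number of sampled pairs are illustrative assumptions.

```python
import random


def expected_shared_inputs(c_pre, n):
    """Expected number of common presynaptic partners of two neurons."""
    return c_pre * c_pre / n


def simulated_shared_inputs(c_pre, n, n_pairs=200, seed=1):
    """Monte Carlo estimate of the mean overlap of two random input sets."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n_pairs):
        inputs_i = set(rng.sample(range(n), c_pre))    # partners of neuron i
        inputs_j = rng.sample(range(n), c_pre)         # partners of neuron j
        total += sum(1 for k in inputs_j if k in inputs_i)
    return total / n_pairs


for n in (1_000, 10_000, 100_000):
    print(n, expected_shared_inputs(100, n), simulated_shared_inputs(100, n))
```

With 100 inputs per neuron, the expected overlap falls from ten shared partners in a network of 1 000 neurons to a tenth of a partner in a network of 100 000 neurons, which illustrates why input correlations become negligible in the sparse limit.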

Note that this is in stark contrast to a fully connected network of finite size where neurons receive highly correlated input, but the correlations are completely described by the time course of the population activity.