20.2 Oscillations: good or bad?

Oscillations are a prevalent phenomenon in biological neural systems and manifest themselves experimentally in electroencephalograms (EEG), recordings of local field potentials (LFP), and multi-unit recordings. Oscillations are thought to stem from synchronous network activity and are often characterized by the associated frequency peak in the Fourier spectrum. For example, oscillations in the range of 30-70Hz are called gamma-oscillations and those above 100Hz ‘ultrafast’ or ‘ripples’ ( 518; 85 ) . Among the slower oscillations, prominent examples are delta oscillations (1-4Hz) and spindle oscillations in the EEG during sleep (7-15Hz) ( 42 ) or theta oscillations (4-10Hz) in hippocampus and other areas ( 85 ) .

Oscillations are thought to play an important role in the coding of sensory information. In the olfactory system an ongoing oscillation of the population activity provides a temporal frame of reference for neurons coding information about the odorant ( 292 ) . Similarly, place cells in the hippocampus exhibit phase-dependent firing activity relative to a background oscillation ( 375; 85 ) . Moreover, rhythmic spike patterns in the inferior olive may be involved in various timing tasks and motor coordination ( 548; 261 ) . Finally, synchronization of firing across groups of neurons has been hypothesized to provide a potential solution to the so-called binding problem ( 479; 480 ) . The common idea across all the above examples is that an oscillation provides a reference signal for a ‘phase code’: the significance of a spike depends on its phase with respect to the global oscillatory reference; cf. Sect. 7.6 and Fig. 7.17 in Ch. 7 . Thus, oscillations are potentially useful for intricate neural coding schemes.

On the other hand, synchronous oscillatory brain activity is correlated with numerous brain diseases. For example, an epileptic seizure is defined as ‘a transient occurrence of signs and/or symptoms due to abnormal excessive or synchronous neuronal activity in the brain’ (150). Similarly, Parkinson’s disease is characterized by a high level of neuronal synchrony in the thalamus and basal ganglia (387), while neurons in the same areas fire asynchronously in the healthy brain (366). Moreover, local field potential oscillations at theta frequency in thalamic or subthalamic nuclei are linked to tremor in human Parkinsonian patients, i.e., rhythmic finger, hand, or arm movement at 3–6 Hz (387; 504). Therefore, in these and in similar situations, it seems desirable to suppress abnormal, highly synchronous oscillations so as to shift the brain back into its healthy state.

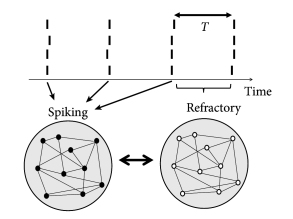

Simulations of the population activity in homogeneous networks typically exhibit oscillations when driven by a constant external input. For example, oscillations in networks of purely excitatory neurons arise because, as soon as some neurons in the network fire, they contribute to exciting others. Once the avalanche of firing has run across the network, all neurons pass through a period of refractoriness until they are ready to fire again. In this case the time scale of the oscillation is set by neuronal refractoriness (Fig. 20.4 A). A similar argument can be made for a homogeneous network of inhibitory neurons driven by a constant external stimulus. After a first burst by a few neurons, mutual inhibition will silence the population until inhibition wears off. Thereafter, the whole network fires again.

Oscillations also arise in networks of coupled excitatory and inhibitory neurons. The excitatory connections cause synchronous bursts of network activity, leading to a build-up of inhibition which, in turn, suppresses the activity of excitatory neurons. In the latter two cases, the oscillation period is therefore set by the build-up and decay time of inhibitory feedback (Fig. 20.4 B).

Even slower oscillations can be generated in ‘winner-take-all’ networks (cf. Chapter 16) with dynamic synapses (cf. Chapter 3) or adaptation (cf. Chapter 6). Suppose the network consists of populations of excitatory neurons which share a common pool of inhibitory neurons. Parameters can be set such that excitatory neurons within the momentarily ‘winning’ population stimulate each other so as to overcome inhibition. In the presence of synaptic depression, however, the mutual excitation fades away after a short time, so that a different excitatory population becomes the new ‘winner’ and switches on. As a result, inhibition arising from inhibitory neurons turns the activity of the previously winning group off, until inhibition has decayed and excitatory synapses have recovered from depression. The time scale is then set by a combination of the time scales of inhibition and synaptic depression. Networks of this type have been used to explain the shift of attention from one point in a visual scene to the next (236).


In this section, we briefly review mathematical theories of oscillatory activity (subsections 20.2.1–20.2.3) before we study the interaction of oscillations with STDP (subsection 20.2.4). The results of this section will form the basis for the discussion of Section 20.3.

20.2.1 Synchronous Oscillations and Locking

Homogeneous networks of spiking neurons show a natural tendency toward oscillatory activity. In Sections 13.4.2 and 14.2.3, we have analyzed the stability of asynchronous firing. In the stationary state the population activity is characterized by a constant value $A_0$. An instability of the dynamics with respect to oscillations at period $T$ appears as a sinusoidal perturbation of increasing amplitude; see Fig. 20.5 A as well as Fig. 14.8 in Ch. 14. The analysis of the stationary state shows that a high level of noise, network heterogeneity, or a sufficient amount of inhibitory plasticity all contribute to stabilizing the stationary state. The linear stability analysis, however, is only valid in the vicinity of the stationary state. As soon as the amplitude of the oscillations is of the same order of magnitude as $A_0$, the solution found by linear analysis is no longer valid since the population activity cannot become negative.

Oscillations can, however, also be analyzed from a completely different perspective. In a homogeneous network with fixed connectivity in the limit of low noise we expect strong oscillations. In the following, we focus on the synchronous oscillatory mode where nearly all neurons fire in ‘lockstep’ (Fig. 20.5 B). We study whether such periodic synchronous bursts of the population activity can be a stable solution of the network equations.

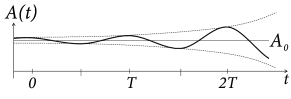

To keep the arguments simple, we consider a homogeneous population of identical SRM neurons (Ch. 6 and Sect. 9.3 in Ch. 9) which is nearly perfectly synchronized and fires almost regularly with period $T$. In order to analyze the existence and stability of a fully locked synchronous oscillation we approximate the population activity by a sequence of square pulses $k$, $k \in \{0, \pm 1, \pm 2, \dots\}$, centered around $t = kT$. Each pulse $k$ has a certain half-width $\delta_k$ and amplitude $(2\delta_k)^{-1}$, since all neurons are supposed to fire once in each pulse; cf. Fig. 20.5 B. If we find that the amplitude of subsequent pulses increases while their width decreases (i.e., $\lim_{k \to \infty} \delta_k = 0$), we conclude that the fully locked state in which all neurons fire simultaneously is stable.

In the examples below, we will prove that the condition for stable locking of all neurons in the population can be stated as a condition on the slope of the input potential $h$ at the moment of firing. More precisely, if the last population pulse occurred at about $t_0 - T$ with amplitude $A(t_0 - T)$, the amplitude of the population pulse at $t_0$ increases if $h'(t_0) > 0$:

$$ h'(t_0) > 0 \quad \Longleftrightarrow \quad A(t_0) > A(t_0 - T) \tag{20.4} $$

If the amplitude of subsequent pulses increases, their width must decrease accordingly. In other words, we have the following Locking Theorem. In a homogeneous network of SRM neurons, a necessary and, in the limit of a large number of presynaptic neurons ($N \to \infty$), also sufficient condition for a coherent oscillation to be asymptotically stable is that firing occurs when the postsynaptic potential arising from all previous spikes in the population is increasing in time (179).


Example: Perfect synchrony in a network of inhibitory neurons

Locking in a population of spiking neurons can be understood by simple geometrical arguments. To illustrate this argument, we study a homogeneous network of $N$ identical SRM neurons which are mutually coupled with strength $w_{ij} = w_0/N$. In other words, the interaction is scaled with one over $N$ so that the total input to a neuron $i$ is of order one even if the number of neurons is large ($N \to \infty$). Since we are interested in synchrony we suppose that all neurons have fired simultaneously at $\hat t = 0$. When will the neurons fire again?

Since all neurons are identical we expect that the next firing time will also be synchronous. Let us calculate the period $T$ between one synchronous pulse and the next. We start from the firing condition of SRM neurons

$$ \vartheta = u_i(t) = \eta(t - \hat t_i) + \sum_j w_{ij} \sum_f \epsilon(t - t_j^{(f)}) \tag{20.5} $$

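where $\epsilon(t)$ is the postsynaptic potential. The axonal transmission delay $\Delta^{\rm ax}$ is included in the definition of $\epsilon$, i.e., $\epsilon(t) = 0$ for $t < \Delta^{\rm ax}$. Since all neurons have fired synchronously at $t = 0$, we set $\hat t_i = t_j^{(f)} = 0$. The result is a condition of the form

$$ \vartheta - \eta(t) = w_0\, \epsilon(t) \tag{20.6} $$

since $w_{ij} = w_0/N$ for $j = 1, \dots, N$. Note that we have neglected the postsynaptic potentials that may have been caused by earlier spikes $t_j^{(f)} < 0$ back in the past.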

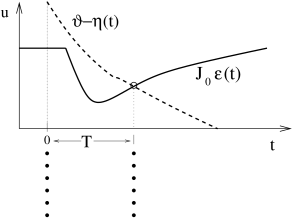

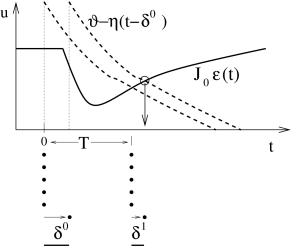

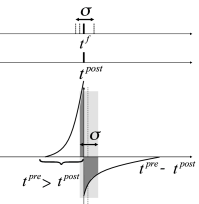

The graphical solution of Eq. (20.6) for the case of inhibitory neurons (i.e., $w_0 < 0$) is presented in Fig. 20.6. The first crossing point of the effective dynamic threshold $\vartheta - \eta(t)$ and $w_0\, \epsilon(t)$ defines the time $T$ of the next synchronous pulse.
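The graphical construction can also be carried out numerically. The sketch below is a minimal illustration under assumptions of our own: an exponential refractory kernel $\eta(s) = -\eta_0 e^{-s/\tau_{\rm ref}}$, an alpha-shaped PSP that starts after an axonal delay, and a constant drive $h^{\rm ext}$ absorbed into the effective threshold $\vartheta - h^{\rm ext} - \eta(t)$ (with these non-positive kernels, purely inhibitory input alone could never reach a positive threshold). All names and parameter values are hypothetical. The code scans for the first crossing and reports the sign of $w_0\,\epsilon'(T)$.

```python
import math

# Hypothetical kernels and parameters (our choice, not taken from the text).
ETA0, TAU_REF = 2.0, 10.0           # refractory kernel amplitude and time constant (ms)
TAU_S, DELTA_AX = 2.0, 1.0          # PSP time constant and axonal delay (ms)
THETA, H_EXT, W0 = 1.0, 1.5, -1.0   # threshold, constant drive, inhibitory coupling w0 < 0


def eta(s):
    """Refractory kernel eta(s) = -ETA0 * exp(-s / TAU_REF) for s > 0."""
    return -ETA0 * math.exp(-s / TAU_REF)


def eps(s):
    """Alpha-shaped PSP with unit peak; zero before the axonal delay."""
    x = (s - DELTA_AX) / TAU_S
    return x * math.exp(1.0 - x) if x > 0 else 0.0


def next_sync_time(dt=1e-3, t_max=100.0):
    """First t > 0 at which w0*eps(t) reaches the effective threshold theta - h_ext - eta(t)."""
    n = 1
    while n * dt < t_max:
        t = n * dt
        if eta(t) + H_EXT + W0 * eps(t) >= THETA:
            return t
        n += 1
    return None


T = next_sync_time()
ds = 1e-4
slope = W0 * (eps(T + ds) - eps(T - ds)) / (2 * ds)   # central difference for w0 * eps'(T)
print(f"T = {T:.3f} ms, w0*eps'(T) = {slope:.4f} (positive: input rising at firing)")
```

With these parameters the inhibitory PSP is already decaying when the neurons fire, so $w_0\,\epsilon$ is rising at $T$, which is the situation in which the argument below predicts locking.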

What happens if synchrony at $t = 0$ was not perfect? Let us assume that one of the neurons is slightly late compared to the others (Fig. 20.6 B). It will receive the input $w_0\, \epsilon(t)$ from the others, thus the right-hand side of Eq. (20.6) is the same. The left-hand side, however, is different since the last firing was at $\delta_0$ instead of zero. The next firing time is at $t = T + \delta_1$ where $\delta_1$ is found from

$$ \vartheta - \eta(T + \delta_1 - \delta_0) = w_0\, \epsilon(T + \delta_1) \tag{20.7} $$

Linearization with respect to $\delta_0$ and $\delta_1$ then yields

$$ \delta_1 = \frac{\eta'(T)}{\eta'(T) + w_0\, \epsilon'(T)}\, \delta_0 \tag{20.8} $$

where the zeroth-order terms cancel because of Eq. (20.6), and where we have exploited that neurons with ‘normal’ refractoriness and adaptation properties have $\eta' > 0$. From Eq. (20.8) we conclude that the neuron which has been late is ‘pulled back’ into the synchronized pulse of the others ($|\delta_1| < |\delta_0|$) if the postsynaptic potential $w_0\, \epsilon$ is rising at the moment of firing at $T$, i.e., if $w_0\, \epsilon'(T) > 0$. Equation (20.8) is a special case of the Locking Theorem.
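To see how good the linearization (20.8) is, the sketch below solves Eq. (20.7) directly for a neuron that fired $\delta_0 = 0.1$ ms late and compares the exact $\delta_1$ with the linear prediction. It assumes hypothetical kernels of our own choosing (exponential refractory kernel, alpha-shaped PSP with axonal delay, and a constant drive added to the input so that inhibitory neurons can reach threshold); the root finder is a plain scan followed by bisection.

```python
import math

# Hypothetical kernels and parameters (our choice, not from the text).
ETA0, TAU_REF = 2.0, 10.0
TAU_S, DELTA_AX = 2.0, 1.0
THETA, H_EXT, W0 = 1.0, 1.5, -1.0


def eta(s):
    return -ETA0 * math.exp(-s / TAU_REF)


def d_eta(s):
    return ETA0 / TAU_REF * math.exp(-s / TAU_REF)


def eps(s):
    x = (s - DELTA_AX) / TAU_S
    return x * math.exp(1.0 - x) if x > 0 else 0.0


def d_eps(s):
    x = (s - DELTA_AX) / TAU_S
    return (1.0 - x) * math.exp(1.0 - x) / TAU_S if x > 0 else 0.0


def next_spike(delta0, step=0.01, t_max=100.0):
    """First t > delta0 with eta(t - delta0) + H_EXT + W0*eps(t) = THETA (Eq. 20.7 plus drive)."""
    g = lambda t: eta(t - delta0) + H_EXT + W0 * eps(t) - THETA
    lo = delta0 + step                 # just after the spike, g(lo) < 0
    while g(lo + step) < 0:            # coarse scan for the sign change
        lo += step
        if lo > t_max:
            raise ValueError("no threshold crossing found")
    hi = lo + step
    for _ in range(60):                # bisection keeps g(lo) < 0 <= g(hi)
        mid = 0.5 * (lo + hi)
        if g(mid) < 0:
            lo = mid
        else:
            hi = mid
    return hi


T = next_spike(0.0)                                    # synchronous period, Eq. (20.6)
delta0 = 0.1                                           # the neuron fired 0.1 ms late
delta1_exact = next_spike(delta0) - T
delta1_linear = d_eta(T) / (d_eta(T) + W0 * d_eps(T)) * delta0   # Eq. (20.8)
print(f"delta1 exact = {delta1_exact:.4f} ms, linearized = {delta1_linear:.4f} ms")
```

Because $\eta'(T) > 0$ and $w_0\,\epsilon'(T) > 0$ here, the ratio in Eq. (20.8) lies between zero and one: the late neuron is still late in the next cycle, but by less.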


Example: Proof of locking theorem (*)

In order to check whether the fully synchronized state is a stable solution of the network dynamics, we exploit the population integral equation (14.5) of Ch. 14 and assume that the population has already fired a couple of narrow pulses for $t < 0$ with widths $\delta_k \ll T$, $k \le 0$, and calculate the amplitude and width of subsequent pulses.

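In order to translate the above idea into a step-by-step demonstration, we use

$$ A(t) = \sum_{k=-\infty}^{0} \frac{1}{2\delta_k}\, \mathcal{H}\big[t - (kT - \delta_k)\big]\, \mathcal{H}\big[(kT + \delta_k) - t\big] \tag{20.9} $$

as a parameterization of the population activity; cf. Fig. 20.5 B. Here, $\mathcal{H}(\cdot)$ denotes the Heaviside step function with $\mathcal{H}(s) = 1$ for $s > 0$ and $\mathcal{H}(s) = 0$ for $s \le 0$. For stability, we need to show that the amplitude of the rectangular pulses increases while the width $\delta_k$ of subsequent pulses decreases.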

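To prove the theorem, we assume that all neurons in the network have (i) identical refractoriness $\eta(s)$ with $\eta'(s) > 0$ for all $s > 0$; (ii) identical shape $\epsilon(s)$ of the postsynaptic potential; (iii) all couplings are identical, $w_{ij} = w_0/N$; and (iv) all neurons receive the same constant external drive $h^{\rm ext}$. The sequence of rectangular activity pulses in the past therefore gives rise to an input potential

$$ h(t) = h^{\rm ext} + w_0 \int_0^\infty \epsilon(s)\, A(t - s)\, ds = h^{\rm ext} + w_0 \sum_{k=-\infty}^{0} \frac{1}{2\delta_k} \int_{-\delta_k}^{\delta_k} \epsilon(t - kT - s)\, ds \tag{20.10} $$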

which is identical for all neurons.
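A minimal sketch of the parameterization (20.9) and of the input potential (20.10) it produces, with a period, coupling, drive, and alpha-shaped PSP chosen by us purely for illustration (all hypothetical). It checks two properties used in the argument: each pulse has unit area, i.e., every neuron fires once per pulse, and for narrow pulses $h(t)$ reduces to $h^{\rm ext} + w_0 \sum_k \epsilon(t - kT)$.

```python
import math

# Hypothetical parameters (our choice): period, coupling, drive, alpha-shaped PSP.
T = 14.0
W0, H_EXT = -1.0, 1.5
TAU_S, DELTA_AX = 2.0, 1.0


def eps(s):
    x = (s - DELTA_AX) / TAU_S
    return x * math.exp(1.0 - x) if x > 0 else 0.0


def activity(t, deltas):
    """Eq. (20.9): pulse k = 0, -1, -2, ... is centred at k*T with half-width
    deltas[-k] and height 1/(2*delta); strict inequalities because H(0) = 0."""
    a = 0.0
    for m, d in enumerate(deltas):          # m = -k
        centre = -m * T
        if centre - d < t < centre + d:
            a += 1.0 / (2.0 * d)
    return a


def input_potential(t, deltas, n=2000):
    """Eq. (20.10), each pulse integral evaluated with the midpoint rule."""
    h = H_EXT
    for m, d in enumerate(deltas):
        centre = -m * T
        ds = 2.0 * d / n
        integral = sum(eps(t - centre - (-d + (i + 0.5) * ds)) for i in range(n)) * ds
        h += W0 * integral / (2.0 * d)
    return h


# Area of the pulse centred at t = 0 (one spike per neuron).
dt = 1e-3
area = sum(activity(-T / 2 + i * dt, [0.5] * 5) for i in range(int(T / dt))) * dt

# Narrow-pulse limit: h(t) -> h_ext + w0 * sum_k eps(t - k*T).
narrow = [0.01] * 5
t = 5.0
h_pulses = input_potential(t, narrow)
h_limit = H_EXT + W0 * sum(eps(t + m * T) for m in range(5))
print(f"pulse area {area:.3f}; h(t) from pulses {h_pulses:.6f}, narrow limit {h_limit:.6f}")
```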

In order to determine the period $T$, we consider a neuron in the center of the square pulse which has fired its last spike at $\hat t = 0$. The next spike of this neuron must occur at $t = T$, viz. in the center of the next square pulse. We use $\hat t = 0$ in the threshold condition for spike firing, which yields

$$ T = \min\{\, t > 0 \mid \eta(t) + h(t) = \vartheta \,\} \tag{20.11} $$

If a synchronized solution exists, Eq. (20.11) defines its period.

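We now use the population equation of renewal theory, Eq. (14.5) in Ch. 14. In the limit of low noise, the interval distribution $P_I(t \mid \hat t)$ becomes a $\delta$-function: neurons that have fired at time $\hat t$ fire again at time $t = \hat t + T(\hat t)$. Using the rules for calculation with $\delta$-functions and the threshold condition (Eq. (20.11)) for firing, we find

$$ A(t) = \left[1 + \frac{h'(t)}{\eta'\big(T_b(t)\big)}\right] A\big(t - T_b(t)\big) \tag{20.12} $$

where the prime denotes the temporal derivative. $T_b(t)$ is the ‘backward interval’: neurons that fire at time $t$ have fired their previous spike at time $t - T_b(t)$. The bracket is the factor $1 - dT_b/dt$ by which the $\delta$-function compresses or stretches the time axis; differentiating the threshold condition $\eta(T_b(t)) + h(t) = \vartheta$ with respect to $t$ gives $dT_b/dt = -h'(t)/\eta'(T_b)$. According to our assumption $\eta'(T_b) > 0$. A necessary condition for an increase of the activity from one cycle to the next is therefore that the derivative $h'$ is positive, which is the essence of the Locking Theorem.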

The Locking Theorem is applicable in a large population of SRM neurons ( 179 ) . As discussed in Chapter 6 , the framework of SRM encompasses many neuron models, in particular the leaky integrate-and-fire model. Note that the above locking argument is a ‘local’ stability argument and requires that network firing is already close to the fully synchronized state. A related but global locking argument has been presented by ( 348 ) .
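The theorem can be probed with a direct simulation. The sketch below is a time-stepped, noise-free network of $N = 20$ SRM neurons with all-to-all inhibitory coupling $w_{ij} = w_0/N$, started from an imperfectly synchronous volley with spike times spread over 1 ms. Kernels, drive, and all parameter values are hypothetical choices of ours; with them the total PSP is decaying, so $w_0\,\epsilon$ is rising at the moment of firing, and the theorem predicts that the spread of spike times within each population pulse shrinks from cycle to cycle.

```python
import math
import random

# Hypothetical parameters (our choice, not from the text).
N = 20
ETA0, TAU_REF = 2.0, 10.0           # refractory kernel (ms)
TAU_S, DELTA_AX = 2.0, 1.0          # alpha-shaped PSP and axonal delay (ms)
THETA, H_EXT, W0 = 1.0, 1.5, -1.0   # threshold, drive, coupling w_ij = W0 / N
DT, T_SIM = 0.01, 160.0             # time step and duration (ms)
MEMORY = 40.0                       # ms; older spikes leave a negligible PSP


def eta(s):
    return -ETA0 * math.exp(-s / TAU_REF)


def eps(s):
    x = (s - DELTA_AX) / TAU_S
    return x * math.exp(1.0 - x) if x > 0 else 0.0


def simulate(jitter=1.0, seed=1):
    """Noise-free, time-stepped SRM network; returns initial spike times and spike trains."""
    rng = random.Random(seed)
    last = [-rng.uniform(0.0, jitter) for _ in range(N)]   # imperfect synchrony near t = 0
    initial = list(last)
    recent = list(last)                                    # recent spikes of the population
    trains = [[] for _ in range(N)]
    for n in range(1, int(round(T_SIM / DT)) + 1):
        t = n * DT
        recent = [tf for tf in recent if t - tf < MEMORY]
        h = H_EXT + (W0 / N) * sum(eps(t - tf) for tf in recent)   # same for every neuron
        for i in range(N):
            if eta(t - last[i]) + h >= THETA:                       # threshold condition
                last[i] = t
                trains[i].append(t)
                recent.append(t)
    return initial, trains


initial, trains = simulate()
n_cycles = min(len(tr) for tr in trains)   # ignore a possibly incomplete final pulse
spreads = [max(tr[k] for tr in trains) - min(tr[k] for tr in trains) for k in range(n_cycles)]
print(f"initial spread: {max(initial) - min(initial):.2f} ms")
for k, s in enumerate(spreads, start=1):
    print(f"pulse {k}: spread {s:.2f} ms")
```

The spread is only resolved up to the time step `DT`; in this pure-Python form the run takes on the order of a second.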

20.2.2 Oscillations with irregular firing

In the previous subsection, we have studied fully connected homogeneous network models which exhibit oscillations of the neuronal activity. In the locked state, all neurons fire regularly and in near-perfect synchrony. Experiments, however, show that though oscillations are a common phenomenon, spike trains of individual neurons are often highly irregular.

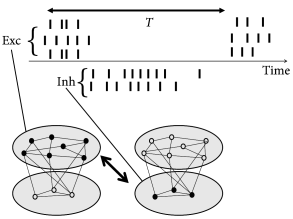

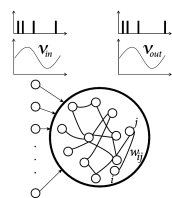

Periodic large-amplitude oscillations of the population activity are compatible with irregular spike trains if individual neurons fire at an average frequency that is significantly lower than the frequency of the population activity (Fig. 20.7). If the subgroup of neurons that is active during each activity burst changes from cycle to cycle, then the distribution of inter-spike intervals can be broad, despite a prominent oscillation. For example, in the inferior olivary nucleus, individual neurons have a low firing rate of one spike per second while the population activity oscillates at about 10 Hz. Strong oscillations with irregular spike trains have interesting implications for short-term memory and timing tasks (259).
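A caricature of this regime, built on our own assumption that population bursts occur exactly every 100 ms and that each neuron joins any given burst independently with probability 0.1 (all names and numbers hypothetical). The population activity is then perfectly periodic at 10 Hz, yet each neuron fires at about 1 Hz with geometrically distributed inter-spike intervals, whose coefficient of variation is close to $\sqrt{1 - 0.1} \approx 0.95$.

```python
import math
import random

N_NEURONS, F_POP, P_FIRE, DURATION = 100, 10.0, 0.1, 100.0   # hypothetical (Hz, s)


def sparse_oscillation(seed=0):
    """Population bursts every 1/F_POP seconds; each neuron joins a given burst
    independently with probability P_FIRE (a caricature, not a network model)."""
    rng = random.Random(seed)
    period = 1.0 / F_POP
    n_cycles = int(DURATION * F_POP)
    return [[k * period for k in range(n_cycles) if rng.random() < P_FIRE]
            for _ in range(N_NEURONS)]


def isi_cv(trains):
    """Coefficient of variation of all inter-spike intervals."""
    isis = [b - a for tr in trains for a, b in zip(tr, tr[1:])]
    mean = sum(isis) / len(isis)
    var = sum((x - mean) ** 2 for x in isis) / len(isis)
    return math.sqrt(var) / mean


trains = sparse_oscillation()
rate = sum(len(tr) for tr in trains) / (N_NEURONS * DURATION)   # spikes per neuron per second
cv = isi_cv(trains)
print(f"population rhythm {F_POP:.0f} Hz, single-neuron rate {rate:.2f} Hz, ISI CV {cv:.2f}")
```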

20.2.3 Phase Models


For weak coupling, synchronization and locking of periodically firing neurons can be systematically analyzed in the framework of phase models ( 282; 139; 275; 397 ) .

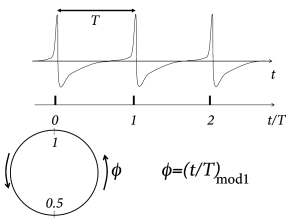

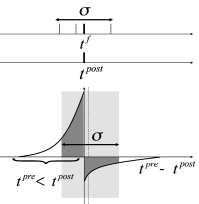

Suppose a neuron driven by a constant input fires regularly with period $T$, i.e., it evolves on a periodic limit cycle. We have already seen in Ch. 4 that the position on the limit cycle can be represented by a phase $\phi$. In contrast to Ch. 4, we adopt here the conventions that (i) spikes occur at phase $\phi = 0$ (Fig. 20.8) and (ii) between spikes the phase increases from zero to one at a constant speed $f_0 = 1/T$, where $f_0$ is the frequency of the periodic firing. In more formal terms, the phase of an uncoupled neural ‘oscillator’ evolves according to the differential equation

$$ \frac{d\phi}{dt} = f_0 \tag{20.13} $$

and we identify the value 1 with zero. Integration yields $\phi(t) = (t/T) \bmod 1$, where ‘mod 1’ means ‘modulo 1’. The phase represents the position on the limit cycle (Fig. 20.8 A).

Phase models for networks of interacting neurons are characterized by the intrinsic frequencies of the neurons ($f_j$ for $j = 1, 2, \dots, N$) as well as the mutual coupling. For weak coupling, the interaction can be directly formulated for the phase variables

$$ \frac{d\phi_i}{dt} = f_i + \varepsilon \sum_j w_{ij}\, P(\phi_i, \phi_j) \tag{20.14} $$

where $\varepsilon$ is the overall coupling strength, $w_{ij}$ are the relative pairwise couplings, and $P$ the phase coupling function. For pulse-coupled oscillators, an interaction from neuron $j$ to neuron $i$ happens only at the moment when the presynaptic neuron $j$ emits a spike. Hence the phase coupling function $P(\phi_i, \phi_j)$ is replaced by

$$ P(\phi_i, \phi_j) \;\to\; \tilde P(\phi_i) \sum_f \delta\big(t - t_j^{(f)}\big) \tag{20.15} $$

where $t_j^{(f)}$ are the spike times of the presynaptic neuron, defined by the zero-crossings of $\phi_j$, i.e., $\{t \mid \phi_j(t) = 0\}$. The function $\tilde P$ is the ‘phase response curve’: the effect of an input pulse depends on the momentary state (i.e., the phase $\phi_i$) of the receiving neuron (see the following example).

For neurons with synaptic currents of finite duration, phase coupling is not restricted to the moment of spike firing ($\phi_j = 0$) of the presynaptic neuron, but extends also to phase values $\phi_j > 0$. The phase coupling can be positive or negative. Positive values of $P$ lead to a phase advance of the postsynaptic neuron. Phase models are widely used to study synchronization phenomena (397).

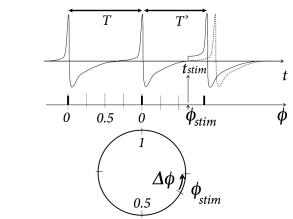

Example: Phase response curve

The idea of a phase response curve is illustrated in Fig. 20.8 B. A short positive stimulating input pulse of amplitude $\epsilon$ perturbs the period of an oscillator from its reference value $T$ to a new value $T_\epsilon$ which might be shorter or longer than $T$ (87; 555). The phase response curve measures the phase advance $\Delta\phi = (T - T_\epsilon)/T$ as a function of the phase $\phi$ at which the stimulus was given.

Knowledge of the stimulation phase is, however, not sufficient to characterize the effect on the period, because a stimulus of amplitude $2\epsilon$ is expected to cause a larger phase shift than a stimulus of amplitude $\epsilon$. The mathematically relevant notion is therefore the phase advance, divided by the (small) amplitude of the stimulus. More precisely, the infinitesimal phase response curve is defined as

$$ \tilde P(\phi) = \lim_{\epsilon \to 0} \frac{T - T_\epsilon(\phi)}{\epsilon\, T} \tag{20.16} $$

The infinitesimal phase response curve can be extracted from experimental data ( 201 ) and plays an important role in the theory of weakly coupled oscillators.
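For the leaky integrate-and-fire neuron, Eq. (20.16) can be evaluated in closed form, which makes it a convenient test case. The sketch assumes (our choice) $\tau\, du/dt = -u + RI$ with reset to 0 at threshold $\vartheta$, and an input pulse that makes the voltage jump by $\epsilon$. The free period is $T = \tau \ln[RI/(RI - \vartheta)]$, and the infinitesimal phase response curve works out to $\tilde P(\phi) = \tau\, e^{\phi T/\tau}/(T\, RI)$, which increases with phase; the code compares this formula with a finite-amplitude estimate. Parameter values and function names are hypothetical.

```python
import math

# Hypothetical LIF parameters (our choice): tau du/dt = -u + RI, reset to 0 at threshold.
TAU, RI, THETA = 10.0, 1.5, 1.0             # ms; voltages in units of the threshold
PERIOD = TAU * math.log(RI / (RI - THETA))  # free-running period T


def perturbed_period(phi, amp):
    """Interspike interval when the voltage jumps by `amp` at phase phi (0 <= phi < 1)."""
    t_kick = phi * PERIOD
    u = RI * (1.0 - math.exp(-t_kick / TAU)) + amp   # free trajectory plus the kick
    if u >= THETA:                                    # the kick itself triggers the spike
        return t_kick
    return t_kick + TAU * math.log((RI - u) / (RI - THETA))


def prc_numeric(phi, amp=1e-6):
    """Eq. (20.16) evaluated with a small but finite amplitude."""
    return (PERIOD - perturbed_period(phi, amp)) / (amp * PERIOD)


def prc_analytic(phi):
    """Infinitesimal PRC of this LIF neuron: tau * exp(phi*T/tau) / (T * RI)."""
    return TAU * math.exp(phi * PERIOD / TAU) / (PERIOD * RI)


for phi in (0.1, 0.5, 0.9):
    print(f"phi = {phi}: numeric {prc_numeric(phi):.5f}, analytic {prc_analytic(phi):.5f}")
```

A voltage kick late in the cycle, when the membrane is close to threshold, advances the next spike more than the same kick early in the cycle.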

Example: Kuramoto model

The Kuramoto model (282; 8) describes a network of $N$ phase oscillators with homogeneous all-to-all connections and a sinusoidal phase coupling function

$$ \frac{d\phi_i}{dt} = f_i + \frac{\varepsilon}{N} \sum_{j=1}^{N} \sin\big(2\pi(\phi_j - \phi_i)\big) \tag{20.17} $$

where $f_i$ is the intrinsic frequency of oscillator $i$. For the analysis of the system, it is usually assumed that both the coupling strength $\varepsilon$ and the frequency spread $(f_i - \bar f)/\bar f$ are small. Here $\bar f$ denotes the mean frequency.

If the spread of intrinsic frequencies is zero, then an arbitrarily small coupling $\varepsilon > 0$ synchronizes all units at the same phase $\phi_i(t) = \phi(t)$. This is easy to see. First, synchronous dynamics $\phi_i(t) = \phi(t)$ for all $i$ are a solution of Eq. (20.17). Second, if one of the oscillators is late by a small amount, say oscillator $n$ has a phase $\phi_n(t) < \phi(t)$, then the interaction with the others makes it speed up (if the phase difference $\phi(t) - \phi_n(t)$ is smaller than 0.5) or slow down (if the phase difference is larger than 0.5), until it is synchronized with the group of other neurons. More generally, for a fixed (small) spread of intrinsic frequencies, there is a minimal coupling strength above which global synchronization sets in.

We note that, in contrast to pulse-coupled models, units in the Kuramoto model can interact at arbitrary phases.
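A minimal Euler integration of Eq. (20.17), assuming $N = 50$ oscillators, random initial phases, a 1 ms time step, and frequencies and couplings of our own choosing (all names and values hypothetical). It uses the standard mean-field identity $\frac{1}{N}\sum_j \sin(2\pi(\phi_j - \phi_i)) = r \sin(2\pi(\psi - \phi_i))$ with $r\, e^{2\pi i \psi} = \frac{1}{N}\sum_j e^{2\pi i \phi_j}$, so each step costs $O(N)$ rather than $O(N^2)$. For identical frequencies the order parameter $r$ should approach 1; a run with a frequency spread and weak coupling is included for comparison without a claimed outcome.

```python
import cmath
import math
import random


def order_parameter(phi):
    """Kuramoto order parameter r = |mean of exp(2*pi*i*phi_j)|; r = 1 is perfect synchrony."""
    return abs(sum(cmath.exp(2j * math.pi * p) for p in phi) / len(phi))


def kuramoto(freqs, eps, t_end=3.0, dt=1e-3, seed=0):
    """Euler integration of Eq. (20.17); phases in cycles (mod 1), freqs in Hz, eps in 1/s."""
    rng = random.Random(seed)
    phi = [rng.random() for _ in freqs]                      # random initial phases
    for _ in range(int(round(t_end / dt))):
        z = sum(cmath.exp(2j * math.pi * p) for p in phi) / len(phi)
        r, psi = abs(z), cmath.phase(z) / (2.0 * math.pi)   # mean field r * exp(2*pi*i*psi)
        # (eps/N) * sum_j sin(2*pi*(phi_j - phi_i)) equals eps * r * sin(2*pi*(psi - phi_i))
        phi = [(p + dt * (f + eps * r * math.sin(2.0 * math.pi * (psi - p)))) % 1.0
               for p, f in zip(phi, freqs)]
    return order_parameter(phi)


r_identical = kuramoto([10.0] * 50, eps=5.0)

rng = random.Random(1)
spread = [10.0 + rng.uniform(-2.0, 2.0) for _ in range(50)]   # intrinsic frequencies 8-12 Hz
r_weak = kuramoto(spread, eps=0.5)

print(f"identical frequencies, eps = 5/s: r = {r_identical:.3f}")
print(f"8-12 Hz spread, eps = 0.5/s: r = {r_weak:.3f}")
```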

20.2.4 Synaptic plasticity and oscillations


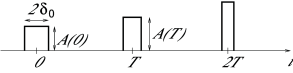

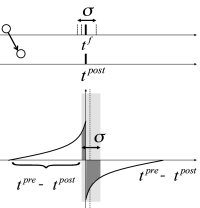

During an oscillation a large fraction of excitatory neurons fires near-synchronously (Fig. 20.4). What happens to the oscillation if the synaptic efficacies between excitatory neurons are not fixed but subject to spike-timing dependent plasticity (STDP)? In this subsection we sketch some of the theoretical arguments (309; 395).

In Fig. 20.9, near-synchronous spikes in a pair of pre- and postsynaptic neurons are shown together with a schematic STDP window; cf. Sect. 19.1.2 in Ch. 19. Note that the horizontal axis of the STDP window is the difference between the spike arrival time at the presynaptic terminal and the spike firing time of the postsynaptic neuron. This choice (where the presynaptic spike arrival time is identified with the onset of the EPSP) corresponds to one option, but other choices (328; 483) are equally common. With our convention, the jump from potentiation to depression occurs if postsynaptic firing coincides with presynaptic spike arrival. However, because of axonal transmission delays, synchronous firing leads to spike arrival that is delayed with respect to the postsynaptic spike. Therefore, consistent with experiments (483), synchronous spike firing with small jitter leads, at low repetition frequency, to a depression of synapses (Fig. 20.9 A). Lateral connections within the population of excitatory neurons are therefore weakened (309).

However, the shape of the STDP window is frequency dependent with a marked dominance of potentiation at high repetition frequencies ( 483 ) . Therefore, near-synchronous firing with a large jitter leads to a strengthening of excitatory connections (Fig. 20.9 C) in the synchronously firing group ( 395 ) . In summary, synchronous firing and STDP tightly interact.
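The competition between depression at small jitter and potentiation at large jitter can be illustrated with a simple pair-based window. The sketch below assumes (our choice) a two-sided exponential window in $s = t^{\rm arrival} - t^{\rm post}$ whose integral is positive, and a timing difference that is Gaussian with mean equal to an axonal delay of 2 ms and standard deviation equal to the jitter. It does not model the frequency dependence of the window reported in (483); it only shows that averaging a delayed, positive-integral window over a broad jitter distribution flips the sign of the expected weight change. All names and values are hypothetical.

```python
import math

# Hypothetical pair-based STDP window (our choice); s = t_arrival - t_post in ms.
# Potentiation for s < 0, depression for s > 0; integral A_P*TAU_P - A_D*TAU_D = 10 > 0.
A_P, TAU_P = 1.0, 20.0
A_D, TAU_D = 0.5, 20.0


def window(s):
    return A_P * math.exp(s / TAU_P) if s < 0 else -A_D * math.exp(-s / TAU_D)


def mean_weight_change(delay, jitter, ds=0.01):
    """Expected window value when s is Gaussian with mean `delay` (axonal delay)
    and standard deviation `jitter`; midpoint rule over +/- 10 standard deviations."""
    lo = delay - 10.0 * jitter
    n = int(20.0 * jitter / ds)
    norm = 1.0 / (jitter * math.sqrt(2.0 * math.pi))
    total = 0.0
    for i in range(n):
        s = lo + (i + 0.5) * ds
        total += window(s) * norm * math.exp(-0.5 * ((s - delay) / jitter) ** 2)
    return total * ds


small = mean_weight_change(delay=2.0, jitter=0.5)    # near-synchronous, small jitter
large = mean_weight_change(delay=2.0, jitter=50.0)   # slow oscillation, large jitter
print(f"small jitter: {small:+.4f}, large jitter: {large:+.4f}")
```

With small jitter nearly all pairs fall on the depression side because of the delay; with large jitter the average approaches the (positive) area of the window scaled by the Gaussian density near $s = 0$.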

Example: Bistability of plastic networks

Since we are interested in the interaction of STDP with oscillations, we focus on a recurrent network driven by periodically modulated spike input (Fig. 20.10 A). The lateral connection weights $w_{ij}$ from a presynaptic neuron $j$ to a postsynaptic neuron $i$ are changed according to Eq. (19.2.2) of Chapter 19, which we repeat here for convenience

$$ \frac{d}{dt} w_{ij}(t) = S_j(t)\left[a_1^{\rm pre} + \int_0^\infty A_-(w_{ij})\, W_-(s)\, S_i(t-s)\, ds\right] + S_i(t)\left[a_1^{\rm post} + \int_0^\infty A_+(w_{ij})\, W_+(s)\, S_j(t-s)\, ds\right] \tag{20.18} $$

where $S_j$ and $S_i$ denote the spike trains of pre- and postsynaptic neurons, respectively. The time course of the STDP window is given by $W_+$ and $W_-$, and $a_1^{\rm pre}$ and $a_1^{\rm post}$ are non-Hebbian contributions, i.e., an isolated presynaptic or postsynaptic spike causes a small weight change, even if it is not paired with activity of the partner neuron. Non-Hebbian terms are linked to ‘homeostatic’ or ‘heterosynaptic’ plasticity and are useful to balance weight growth caused by Hebbian terms (Ch. 19). The amplitude factors are given by soft bounds analogous to Eq. 19.4:

$$ A_+(w_{ij}) = A_+^0\, (w^{\max} - w_{ij})^{\beta} \tag{20.19} $$

$$ A_-(w_{ij}) = A_-^0\, w_{ij}^{\beta} \tag{20.20} $$

with $0 < \beta \le 1$. An exponent $\beta$ close to zero implies that there is hardly any weight dependence except close to the bounds at zero and $w^{\max}$.
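Because the integrals in Eq. (20.18) are sums of the kernels $W_\pm$ over past spikes, exponential kernels turn them into traces that only need updating at spike times. The event-driven sketch below assumes $W_\pm(s) = e^{-s/\tau_\pm}$, the soft bounds (20.19) and (20.20) with $\beta = 0.5$, and $A_-^0 < 0$ so that the pre-after-post term depresses; these choices, the parameter values, and the function names are our own. Clipping to $[0, w^{\max}]$ is only a safeguard.

```python
import math

# Hypothetical parameters (our choice). A_MINUS0 < 0 so that the pre-after-post
# term depresses; this sign convention is an assumption.
W_MAX, BETA = 1.0, 0.5
A_PLUS0, A_MINUS0 = 0.01, -0.01
TAU_PLUS, TAU_MINUS = 20.0, 20.0    # ms; W_+(s) = exp(-s/TAU_PLUS), W_-(s) = exp(-s/TAU_MINUS)
A1_PRE, A1_POST = 0.0, 0.0          # non-Hebbian terms a_1^pre, a_1^post


def a_plus(w):
    return A_PLUS0 * (W_MAX - w) ** BETA    # Eq. (20.19)


def a_minus(w):
    return A_MINUS0 * w ** BETA             # Eq. (20.20)


def run_stdp(pre, post, w=0.5):
    """Event-driven integration of Eq. (20.18) for a single synapse j -> i.
    x_pre and x_post hold the integrals over past spikes filtered by W_+ and W_-.
    Simultaneous events are processed post before pre (string order), an arbitrary choice."""
    events = sorted([(t, "pre") for t in pre] + [(t, "post") for t in post])
    x_pre = x_post = 0.0
    t_last = None
    for t, kind in events:
        if t_last is not None:                      # decay both traces since the last event
            x_pre *= math.exp(-(t - t_last) / TAU_PLUS)
            x_post *= math.exp(-(t - t_last) / TAU_MINUS)
        t_last = t
        if kind == "pre":                           # S_j(t) term of Eq. (20.18)
            w += A1_PRE + a_minus(w) * x_post
            x_pre += 1.0
        else:                                       # S_i(t) term of Eq. (20.18)
            w += A1_POST + a_plus(w) * x_pre
            x_post += 1.0
        w = min(max(w, 0.0), W_MAX)                 # safeguard only
    return w


print(run_stdp(pre=[10.0], post=[15.0]))   # pre before post: potentiation
print(run_stdp(pre=[15.0], post=[10.0]))   # post before pre: depression
```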

The analysis of the network dynamics in the presence of STDP (395; 187; 256) shows that the most relevant quantities are (i) the integral over the STDP window, evaluated at a weight value $w$ far away from the bounds; (ii) the Fourier transform of the STDP window at the frequency $2\pi/T$, where $T$ is the period of the oscillatory drive; (iii) the sum of the non-Hebbian terms $a_1^{\rm pre} + a_1^{\rm post}$.

Oscillations of brain activity in the $\delta$ or $\theta$ frequency band are relatively slow compared to the time scale of STDP. If we restrict the analysis to oscillations with a period that is long compared to the time scale of the learning window, the Fourier transform of the STDP window mentioned in (ii) can be approximated by the integral mentioned in (i). Note that slow sinusoidal oscillations correspond to a large jitter of spike times (Fig. 20.9 C).
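The approximation of (ii) by (i) can be checked for a concrete window. Assuming (our choice) the two-sided exponential window $W(s) = A_P e^{s/\tau_P}$ for $s < 0$ and $W(s) = -A_D e^{-s/\tau_D}$ for $s > 0$, the Fourier transform has the closed form $\tilde W(\omega) = A_P \tau_P/(1 - i\omega\tau_P) - A_D \tau_D/(1 + i\omega\tau_D)$, which tends to the integral $A_P\tau_P - A_D\tau_D$ as $\omega = 2\pi/T \to 0$. The sketch prints the relative deviation for a few periods; how long $T$ must be for a good approximation depends on the (hypothetical) window parameters.

```python
import math

# Hypothetical two-sided exponential window (our choice); s = t_arrival - t_post in ms.
A_P, TAU_P = 1.0, 20.0    # potentiation for s < 0
A_D, TAU_D = 0.5, 20.0    # depression for s > 0


def window_integral():
    """Integral of W(s) over all s."""
    return A_P * TAU_P - A_D * TAU_D


def window_fourier(omega):
    """Closed-form integral of W(s) * exp(-1j * omega * s) over all s (omega in rad/ms)."""
    return A_P * TAU_P / (1 - 1j * omega * TAU_P) - A_D * TAU_D / (1 + 1j * omega * TAU_D)


def relative_deviation(period):
    """|W~(2*pi/T) - integral| / |integral| for an oscillation with period T (ms)."""
    w_hat = window_fourier(2.0 * math.pi / period)
    return abs(w_hat - window_integral()) / abs(window_integral())


for period in (100.0, 1000.0, 10000.0):
    print(f"T = {period:7.0f} ms: relative deviation {relative_deviation(period):.3f}")
```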

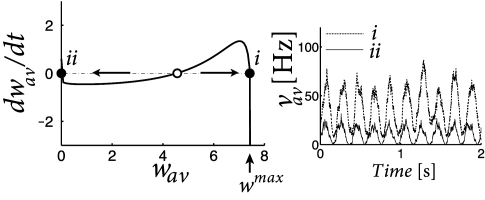

Pfister and Tass (395) found that the network dynamics is bistable if the integral over the learning window is positive (which causes an increase of weights for uncorrelated Poisson firing), but weight increase is counterbalanced by weight decrease caused by homeostatic terms in the range $c_1 < a_1^{\rm pre} + a_1^{\rm post} < c_2 < 0$ with suitable negative constants $c_1$ and $c_2$. Therefore, for the same periodic stimulation paradigm, the network can be in either a stable state where the average weight is close to zero, or in a different stable state where the average weight is significantly positive (Fig. 20.10 B). In the latter case, the oscillation amplitude in the network is enhanced (Fig. 20.10 C).
